Building Endpoint Performance Metrics

Many organizations today still struggle with hardware refresh, right-sizing hardware specs, and understanding the performance impact of tools and software across their fleet of end user devices. This has been a problem for as long as people have used technology in their professional lives. In my 25+ year career I don’t think I have ever had a job where these problems were considered solved, and I don’t think we ever got close to solving them. A major obstacle we have all faced is the lack of data, compounded by the difficulty of using the data we do have in a meaningful way.

Here at Snowflake I feel we have just reached our first milestone toward solving this problem, but to be clear we still have quite the journey ahead of us. We have just launched the first version of a Streamlit app that contextualizes the data we collect in a meaningful way to tackle these problems.

Common Struggles & Problems

How much RAM does a developer need? How much disk space does a video editor need? Will a MacBook Air have enough compute power for folks that run mid to high level workloads on their laptop? When is a good time to refresh end user hardware for employees? Every 2 years, 4 years, maybe 5 years? The answers to all of these questions will be relative to your Org and how your Org uses technology. There isn’t a magic answer that is guaranteed to fit all of your needs. We can, however, create frameworks and metrics with data and start iterating over them to try to find those answers.

I have had jobs that allowed for a 2-year hardware refresh, and other jobs where it was 4 years. I even had a job where it was 5 years before you could get a refresh. When looking at the cost of this, you also have many ways to address the cost and the potential cost savings. One way is to take a $3,000.00 laptop, add the cost of required software and any additional costs (extended warranty, peripherals, etc.), and then divide the total by the number of months in the refresh cycle to get the cost per month for that employee. So let’s assume the following costs and timelines:

- Laptop: $3,000.00

- Required Software: $150.00 (cost per license of required software such as agents, apps, etc.)

- Extended Warranty: $150.00

- Hardware Refresh Time: 3 years

This would be a total of $3,300.00 over a 36-month (3 year) period. Thus, it would cost approximately $91.67 a month to provide that laptop to that type of employee. If we adjust the timeline to 4 years for a hardware refresh, that lowers the monthly cost of that employee’s laptop to $68.75, which is a cost savings of $22.92 per month. Now let’s scale that out to an entire division of employees doing similar work on their computers. Let’s assume a large department of 2,000 employees is evaluated at this scale. That $22.92 per month savings turns into $45,840.00 per month, or $550,080.00 a year.
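The arithmetic above can be reproduced with a short Python sketch. The function and variable names are hypothetical, and the fleet totals are built from the per-device savings rounded to the cent, as in the text.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def monthly_cost(total_cost: Decimal, refresh_years: int) -> Decimal:
    """Cost per month of one device over its refresh cycle, rounded to cents."""
    months = refresh_years * 12
    return (total_cost / months).quantize(CENT, rounding=ROUND_HALF_UP)


# Figures from the example: laptop + required software + extended warranty.
total = Decimal("3000.00") + Decimal("150.00") + Decimal("150.00")

three_year = monthly_cost(total, 3)
four_year = monthly_cost(total, 4)
per_device_savings = three_year - four_year

# Scale the rounded per-device savings out to the department.
headcount = 2000
fleet_monthly_savings = per_device_savings * headcount
fleet_yearly_savings = fleet_monthly_savings * 12

print(f"3-year refresh: ${three_year}/month")
print(f"4-year refresh: ${four_year}/month")
print(f"Savings per device: ${per_device_savings}/month")
print(f"Fleet savings: ${fleet_monthly_savings}/month, ${fleet_yearly_savings}/year")
```

Changing `headcount`, the cost inputs, or the refresh years makes it easy to compare other scenarios before committing to a refresh policy.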

How do we ensure that IT and Procurement are making the right decisions that balance user experience, productivity, and keeping costs down? We will want to use data for this, otherwise everyone will just be guessing. A half-million-dollar savings from extending hardware refresh by one extra year for 2,000 employees may look fantastic on paper in regard to overhead costs, but does it impact the employees in a way that hinders their productivity? Will that impact the organization in a negative way and hinder its ability to produce the end results the Org wants? What if adding that extra year also adds tons of support costs and increases the labor demand on the help desk and IT support? If you aren’t collecting these data points, you simply will not know.

There are many ways to calculate total costs, and each Org will approach the many other factors involved differently. The above was merely an example that we toyed around with, and not necessarily one we use or will use. There are more discussions to have and more data to analyze to find a final solution.

Getting Started with Data

We knew this would be a long journey, and before we could start it we had to build a plan with a roadmap to get to our final destination. This all started a couple of years ago when we decided to onboard FleetDM as our main tool to deploy and manage osquery. We wanted a good cross-platform data collector that we could easily ship data to Snowflake with. FleetDM met our needs, and at the time we were only interested in the osquery management itself. So, we started by setting up FleetDM, creating queries and osquery configurations, and building a Kinesis data stream to Snowflake. It took some time to POC the product, pilot it, and then scale out the deployment across all departments. We also did a lot of slow, deliberate testing to ensure we weren’t going to hog the available compute on end user devices with this tool.

While we were building and scaling osquery, our leaders wanted to build some sort of Laptop UX score with data, similar to other UX scores we have created here. So we started designing performance metrics queries. We rolled them out, tested them, monitored them, and finally created data models and shared those data models with our data science team.

If you are unsure where to start, here are some ideas you can research to see if this could be a good fit at your Org.

First and foremost you will need data collection. I will state that, in my professional opinion, MDM is not a good tool for this. MDMs cannot collect inventory data at a high scale and frequency. Also, MDM products for the most part don’t have data streaming abilities (if some MDMs offer this, please let me know). Lastly, there is no really good cross-platform MDM. I wanted to avoid having to deal with multiple tools with multiple different ways to collect data, which would likely result in more labor downstream to data model each different data pipeline. We also have to deal with Linux here, which adds another complexity to the grand scheme of things. This is what made osquery a pretty easy answer to these data collection problems. So, I would strongly suggest you plan for all the platforms you have at your Org and all the data collection frequencies you think you will need. Finally, you will need a destination to ship all this data into, which means some sort of data platform. Snowflake is an excellent choice for this.

Developing Data Collection Tools

With metrics data you must find a balance between collection frequency and what data you collect. If you were to collect data on compute only once or twice a day, that isn’t very meaningful. Likewise, if you collect data every 5 minutes, that is likely going to cause overhead in many areas and could also impact user experience. So, you will want to collect data at a meaningful frequency without getting so aggressive that you impact the end user experience on their devices. There isn’t really a magic number I can give anyone, and it will be relative to your goals. However, we can share our experiences here and hope that it may help others figure out what works best for them and their Org.

We collect data at various intervals. Some data collections happen every hour, some every few hours, and some just once or a few times per day. When it comes to running processes and their resources, you may want to take more frequent snapshots to get better telemetry on the data point you wish to turn into a metric. For example, we collect battery capacity data every hour, because an end user on a laptop can be on the go throughout the day. They will dock their laptop, plug it into the AC adapter to charge it, and of course run it off the battery while running to meetings or collaborating with others in person. Due to the constant fluctuation of states the battery can be in, we want to collect this specific data point frequently enough that it is meaningful. If we collected battery capacity data only once or twice a day, it would not be very meaningful, and it would reduce our chances of capturing data across all the different states a battery can be in.
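As a rough illustration of mixing collection intervals, here is a minimal Python sketch that builds a standalone osquery-style `schedule` block, where each entry has a `query` and an `interval` in seconds. This is an assumption for illustration only: the query names, the chosen columns, and the intervals are hypothetical, and they are not the queries we run or the way FleetDM stores them.

```python
import json

HOUR = 3600          # osquery schedule intervals are expressed in seconds
DAY = 24 * HOUR


def snapshots_per_day(interval_seconds: int) -> int:
    """How many times a scheduled query fires in 24 hours."""
    return DAY // interval_seconds


# Hypothetical query names and intervals; tune these for your own fleet.
schedule = {
    "battery_capacity": {
        "query": "SELECT cycle_count, health, max_capacity, designed_capacity, "
                 "percent_remaining, state FROM battery;",
        "interval": HOUR,        # battery state changes throughout the day
    },
    "disk_utilization": {
        "query": "SELECT path, blocks_size, blocks, blocks_available "
                 "FROM mounts WHERE path = '/';",
        "interval": 6 * HOUR,    # changes slowly, a few snapshots are enough
    },
    "hardware_specs": {
        "query": "SELECT cpu_brand, cpu_physical_cores, physical_memory "
                 "FROM system_info;",
        "interval": DAY,         # effectively static, once a day is plenty
    },
}

config = {"schedule": schedule}

for name, entry in schedule.items():
    print(f"{name}: {snapshots_per_day(entry['interval'])} snapshots per day")

print(json.dumps(config, indent=2))
```

Printing the snapshots per day next to each query is a quick sanity check that fast-changing data such as battery state gets enough samples while static data does not add needless load.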

In FleetDM we set up labels to mark devices as early development testers, pilot groups, and the rest of the population. We use this as a scoping mechanism to test the performance and impact of queries as they move through the development cycle, the stage cycle, and the production cycle. Every query goes through this process, and we leverage FleetDM’s performance impact metrics to determine whether a query has anything more than a minimal impact on end user devices. We also have a data ingest that monitors osquery’s deny list. This is when the local watchdog denies a query because the host is already in a busy state running other queries. These types of things should be considered, developed, and in place before you start to deploy queries to your fleet of end user devices, to mitigate impact.

This type of work does take time and effort. My team spent about an entire year developing queries, testing queries, and optimizing SQL code to reduce compute costs, and then slowly rolled the queries out to our fleet of laptops over time to collect data. We also needed a decent chunk of historical data to trend over time. Historical data in this type of project is quite valuable. You can then build daily, weekly, monthly, and longer-term performance metrics. If an end user device shows poor performance for 1 week out of an entire quarter, you now have that data point to investigate. Perhaps it was a bug in the software you deploy, or an unoptimized configuration. Perhaps it was one of my favorites, a race condition bug, which is difficult to reproduce and annoying to diagnose and troubleshoot. In contrast, if an end user device performs poorly for the majority of the quarter, that is a better signal that the device could have problems, or perhaps should be prioritized for refresh.
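The difference between one bad week and a bad quarter can be sketched in a few lines of Python. This is only an illustration: the function name, the labels, the sample scores, and the cut-off for a "poor" week on a higher-is-better scale are all hypothetical, and your own thresholds should come from your data.

```python
from typing import Sequence

POOR_BELOW = 3.0  # hypothetical cut-off for a "poor" week (higher is better)


def classify_quarter(weekly_scores: Sequence[float],
                     poor_below: float = POOR_BELOW) -> str:
    """Label a device from its weekly performance scores over one quarter."""
    if not weekly_scores:
        return "no data"
    poor_weeks = sum(1 for score in weekly_scores if score < poor_below)
    if poor_weeks == 0:
        return "healthy"
    if poor_weeks > len(weekly_scores) / 2:
        # Poor for the majority of the quarter: stronger signal of a real problem.
        return "refresh candidate"
    # A few poor weeks: worth a look (software bug, bad config, one-off event).
    return "investigate"


# Thirteen weeks in a quarter; the scores are made up for the example.
one_bad_week = [4.1] * 12 + [2.4]
mostly_bad = [2.5] * 9 + [3.8] * 4

print(classify_quarter(one_bad_week))
print(classify_quarter(mostly_bad))
```

Keeping the weekly history is what makes this possible at all; a single snapshot cannot tell a transient problem apart from a declining device.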

So if you go down this road you should set some expectations for yourself, your teams, and your Org that this will take time, and you will need to collect data constantly to build meaningful metrics out of it.

Data Collection & Data Modeling

One of the struggles we had in the beginning was that, while a good number of blogs and online resources use osquery to track computer performance, they lack substance and don’t really deep dive into the subject. Also, modern Apple hardware has changed a lot, which makes this very complex, and I will touch on that later. So, we did a lot of research and development with these queries. Even when reaching out to the community online, I found that not many folks were really doing a lot of this type of work. So, after looking at a lot of security and IT/Ops blogs around osquery, we borrowed some ideas and added our own. This is another reason that this type of work takes time to develop. It seems this is not a very common use of osquery, or perhaps the folks that are doing these things just don’t share what they do in the public space.

A query to track macOS compute:

|

|

I did have to chat with some folks in the official osquery Slack to get this query to work how we wanted it to. I also decided to join the cpu_info table to it, so I could get the make and model of the CPU. It also allowed us to get how many CPU cores an end user device has.

The raw output of the query looks like this (truncated):

|

|

The above snippet of JSON output from osquery is just one process out of about 12,000 lines of output from the query on a single host computer. Luckily, in Snowflake we can model this into a view which turns the JSON output into relational data. The JSON output does come with a caveat. If you look at the FleetDM docs on the processes table, you will see they supply the data type for each column. This is great, because we want the data types in Snowflake to match the ones in the local SQLite database that osquery uses. This reduces error, helps contextualize data, and of course keeps the data the same as in the tools that collect it. However, the JSON data itself pretty much only uses strings. Not every field has a value when collected, and those fields end up as blank strings. When you type cast a blank string to, say, an integer, the cast fails, simply because there is no data to cast. So, we had to do a bit of extra work in our data models. This can be easily fixed in Snowflake and I have blogged about this previously.

Let’s look at a data model:

|

|

To get around this and keep the data types the same across platforms once the data is ingested into Snowflake, we took the approach of using NULLIF. This approach essentially turns a blank string value into NULL before we type cast it, so the cast no longer fails on empty data.
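The idea behind the NULLIF approach can be illustrated outside of SQL with a small Python sketch. This is not our Snowflake view. The helper names are hypothetical and the sample row is made up, although `pid`, `name`, `resident_size`, and `parent` are real columns of the osquery processes table.

```python
from typing import Optional


def nullif(value: Optional[str], match: str = "") -> Optional[str]:
    """Mimic SQL NULLIF: return None when value equals match, else value."""
    return None if value == match else value


def to_int(value: Optional[str]) -> Optional[int]:
    """Cast an osquery string to int, treating a blank string as NULL."""
    cleaned = nullif(value)
    return int(cleaned) if cleaned is not None else None


# osquery JSON results carry every value as a string, including blanks.
# Hypothetical sample row for illustration.
row = {"pid": "412", "name": "Google Chrome",
       "resident_size": "734003200", "parent": ""}

# A naive cast fails on the blank string, just like the failing type cast.
try:
    int(row["parent"])
except ValueError as err:
    print(f"naive cast failed: {err}")

typed = {
    "pid": to_int(row["pid"]),
    "name": row["name"],
    "resident_size": to_int(row["resident_size"]),
    "parent": to_int(row["parent"]),  # blank string becomes None
}
print(typed)
```

The design choice is the same as in the data model: keep the declared type for the column and represent missing values as NULL rather than storing everything as strings.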

When you start your journey around this type of data collection you will want to spend time to fully understand what the data is, and to model it in a way downstream in Snowflake or a product like Snowflake so other teams or applications can use that data in a more meaningful way. This is another factor that just takes time to develop.

osquery that may end up being too “noisy” on the client. We can do this because we ship all osquery results to Snowflake and do all of our data modeling and joins downstream in our data platform.

Building Metrics

My team has partnered with many other teams internally to build this Streamlit App. We have also asked teams we work with closely, like the IT Support Org, to look at the app and data to ensure it makes sense and could be actionable. The CPE team here worked closely with our assets team, our data science team, and leadership to ensure we were building something that could be effective and leverage our data.

When building a metric there are many ways to approach it. Perhaps there are actually too many ways, and this can be confusing and will likely take time to figure out. You could copy the idea of a “credit score”, or simply pick an arbitrary range of numbers to score the metric on. For example, you could score a metric between 1 and 10, with 10 being the best possible outcome and 1 being the worst outcome. How you pick your number range to score your metric isn’t necessarily important, but if you are going to build a series of metrics it makes sense to be consistent across the board. Our teams here have already built service desk metrics, and have opted to use a 1 through 5 scoring range. To keep the metric consistent we decided to also use this range.

Once we established our metric system we started looking at scores. We decided that a 5.0 was really not obtainable, as it would just be a completely idle computer. We expect every computer to have some level of load on it, and for end users to consume some level of compute no matter what tasks are being done. Our asset team reached out to people in various score ranges and collected feedback. We took that feedback and correlated it to UX scores. We discovered that for the vast majority of devices that scored under 3.0, the end user reported performance issues. The data did back this up. When we reviewed the data, we could observe higher consumption of CPU and RAM and fewer free resources on these devices. We also had historical data, so we could observe the performance metrics of a device week over week, month over month, and even quarter over quarter. This helped us contextualize the scores.
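To make the 1 through 5 range concrete, here is a minimal Python sketch of one possible scoring approach. It is an assumption for illustration and not the formula our data science team uses: it maps utilization linearly onto the range and averages CPU and RAM, so only a completely idle machine would reach 5.0. The function names and the sample values are hypothetical.

```python
def metric_score(utilization: float) -> float:
    """Map utilization (0.0 = idle, 1.0 = saturated) onto a 5.0..1.0 score."""
    clamped = min(max(utilization, 0.0), 1.0)
    return 5.0 - 4.0 * clamped


def ux_score(cpu_utilization: float, ram_utilization: float) -> float:
    """Average the per-metric scores into a single 1-5 device score."""
    scores = [metric_score(cpu_utilization), metric_score(ram_utilization)]
    return round(sum(scores) / len(scores), 2)


HEALTHY_AT_OR_ABOVE = 3.0  # early baseline from the text, expected to change

for cpu, ram in [(0.0, 0.0), (0.25, 0.5), (0.5, 0.75), (1.0, 1.0)]:
    score = ux_score(cpu, ram)
    status = "healthy" if score >= HEALTHY_AT_OR_ABOVE else "investigate"
    print(f"cpu={cpu:.2f} ram={ram:.2f} -> score {score} ({status})")
```

Whatever formula you choose, keeping the range consistent with your other metrics matters more than the exact mapping, and the threshold should stay adjustable as feedback comes in.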

I would like to take a quick moment here and point out a few things. While our early established baseline for a healthy device is anything over 3.0 or 3.25, we are still working through these correlations of scoring to actual performance. So, this could change, and we are open to it changing over time. This is the approach one should take when building something like this. You will need to collect data, run analysis, iterate over the data, and improve it over time. We could discover later on that a 2.75 score is really the bare minimum a laptop needs to be considered performant, or it could go up to, say, 3.5. We also plan on iterating over the app, adding in more data to contextualize the overall scoring metric system, and improving things as we go. For example, we might start to add ticket data to each asset, where we track how many support tickets a specific device generates. This could help us discover a device that has intermittent hardware failure, or is just a good old-fashioned “lemon.” The main takeaway should be that when you start to build these types of systems, there is likely always going to be iteration and adjustment over time.

Data Collection Points

We have decided to collect some specific data points for the fleet of both Windows and Mac laptops we manage here. This was our starting point to gather enough data to make a meaningful metric as our output. We will likely expand on these over time, and I would also expect us to improve on existing data collection and telemetry.

Our starting points:

- CPU usage

- RAM usage

- Disk I/O

- Disk Utilization

- Laptop battery health

- Number of running processes

- Department and Cost Center comparisons

- Hardware specifications

Each platform is different, so you likely won’t be able to develop a single query in osquery that can track every statistic across Linux, macOS and Windows. You will have to split some of the data collectors up by the platform they are collecting against. Likewise, when collecting CPU usage data, it is very nice to include the make and model of the CPU along with how many CPU cores the device has. This can help provide more context to your data.

We also included Department and Cost Center comparisons in our app. This allows anyone who is analyzing the data to quickly compare which employees have which metrics within the same group, since the same group of humans is likely doing some overlapping work, and possibly almost the same work, across devices. This will help us profile, say, a sales rep’s needs versus a software engineer’s needs when it comes to hardware specification. If 90% of a Cost Center has acceptable scores with the same spec laptop and 10% have unacceptable scores, we can use that as a starting point to investigate why. Is that 10% doing slightly different and more demanding work? Is that 10% of devices experiencing some sort of software or hardware issue? Since we will not be able to really track every aspect of how a human uses a computer, we can group the data together to help us contextualize it in a way that makes it more actionable.
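The Cost Center comparison described above can be sketched in Python as a simple group-by. The cost center names, the device records, and the 3.0 threshold are hypothetical sample values for illustration. In practice this kind of aggregation would more likely live in the data platform than in application code.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def share_below_threshold(devices: Iterable[Mapping],
                          threshold: float = 3.0) -> Dict[str, float]:
    """Fraction of devices per cost center scoring below the threshold."""
    totals: Dict[str, int] = defaultdict(int)
    below: Dict[str, int] = defaultdict(int)
    for device in devices:
        cost_center = device["cost_center"]
        totals[cost_center] += 1
        if device["score"] < threshold:
            below[cost_center] += 1
    return {cc: below[cc] / totals[cc] for cc in totals}


# Made-up fleet: ten engineering laptops (one struggling), four sales laptops.
fleet = (
    [{"cost_center": "ENG-100", "score": 4.2} for _ in range(9)]
    + [{"cost_center": "ENG-100", "score": 2.6}]
    + [{"cost_center": "SALES-200", "score": 4.5} for _ in range(4)]
)

for cost_center, share in sorted(share_below_threshold(fleet).items()):
    print(f"{cost_center}: {share:.0%} of devices below threshold")
```

A cost center where a small share of same-spec devices falls below the threshold is the starting point for the questions above: different workloads, or a software or hardware issue on those specific machines.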

Apple Silicon Hardware Caveats

With the introduction of Apple Silicon, we have observed Apple bring an entirely new level of performance to our beloved Apple laptops. While the advancements are definitely something to marvel at, they do introduce new challenges. With the advances in Unified Memory Architecture, a lot of the classic memory performance metrics aren’t as applicable. Combined with the fact that the kernel can now compress and swap memory on the fly between processes, this makes tracking memory usage somewhat challenging.

Apple has moved over to a stat they have developed called Memory Pressure, and it is now present in Activity Monitor. There isn’t a great way to track this statistic from a binary, nor from osquery. There is a binary to simulate memory pressure, but it only simulates it and does not collect data on it. So, until Apple provides us a more meaningful way to collect memory pressure from an IT tool, we must use what we can collect today.

Thermal sensors are in a similar boat. On the older Intel based hardware you could query the SMC to get actual temperatures of an Apple device. On Apple Silicon this has moved to Thermal Pressure, and there is a way to collect this from a binary, but osquery doesn’t have a supported table for it yet. We cannot really use MDM to collect this data, since we will want a faster frequency of data collection and historical data to trend it into a meaningful metric. So, Thermal Pressure is a “future us” problem to solve at the moment. We will circle back to this one when we are able to find time to do so.

Looking at the Data

If you have read this massive wall of text blog post, I applaud you! Now we can look at some visuals and go over them.

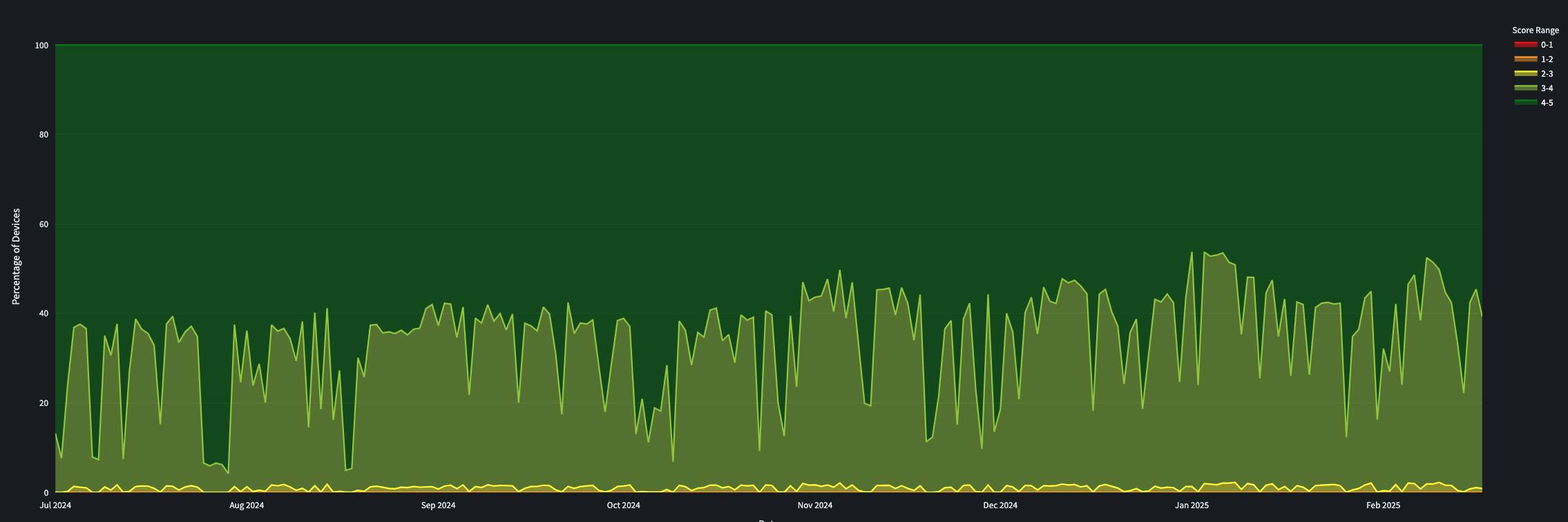

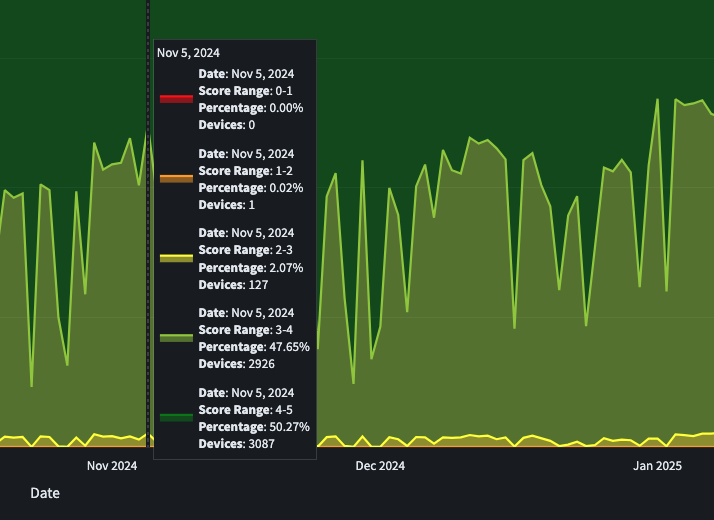

The above visual shows that a majority of our systems are scoring in the 4.0 to 5.0 range, and the next largest group is in the 3.0 to 4.0 range. This aligns with our initial assessment that anything under a 3.0 should be investigated for hardware refresh, as well as for any IT troubleshooting that could help optimize the user experience and performance of an employee laptop. This allows us to quickly visualize an overview of Laptop UX Scores, and since it was built in Streamlit we can interact with the visuals to get more information.

In the above screenshot we can observe the peaks and valleys of performance of our fleet and get a quick overview of the groups of scoring ranges. This is to be expected, as computers get used differently throughout the weeks and months by the humans that use them. We could also guess that near the end of a quarter compute usage might go up as everyone is trying to close out their end of quarter projects, so they are ready to tackle next quarter’s. If that guess is true, we should be able to observe this in the data. The key is to let the data guide you, and while you can make assumptions, ensure that you are also in a position to accept that your predictions may be wrong. The data will tell you the truth!

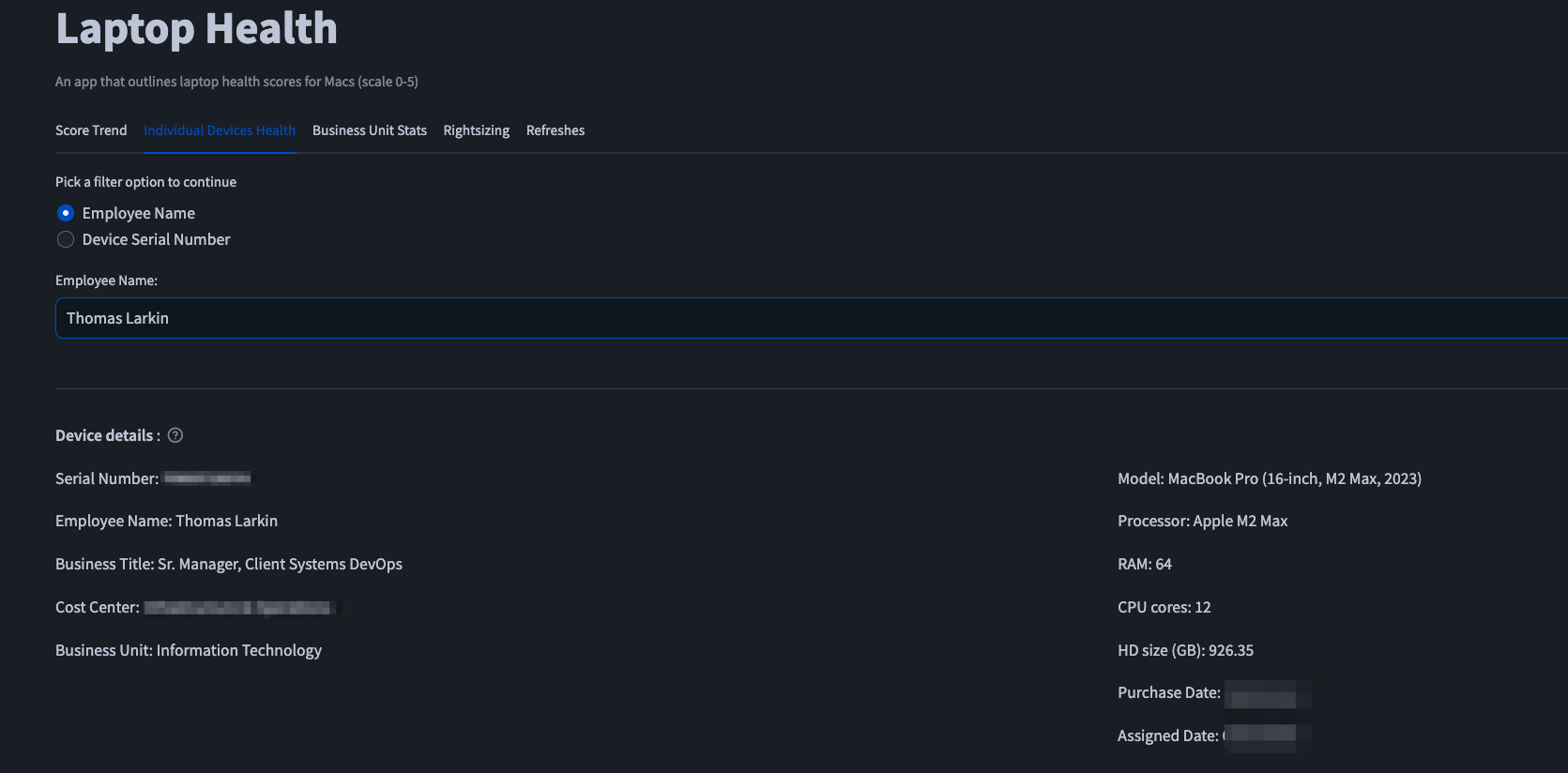

We can also look at individual devices and get a feel for their current state of performance:

This is my laptop, and we can see it displays some metadata about the device which allows us to quickly view it to get an idea of overall hardware spec. We can also use this data to do device to device comparisons at a cost center or at a department level to compare a laptop to its peer laptops.

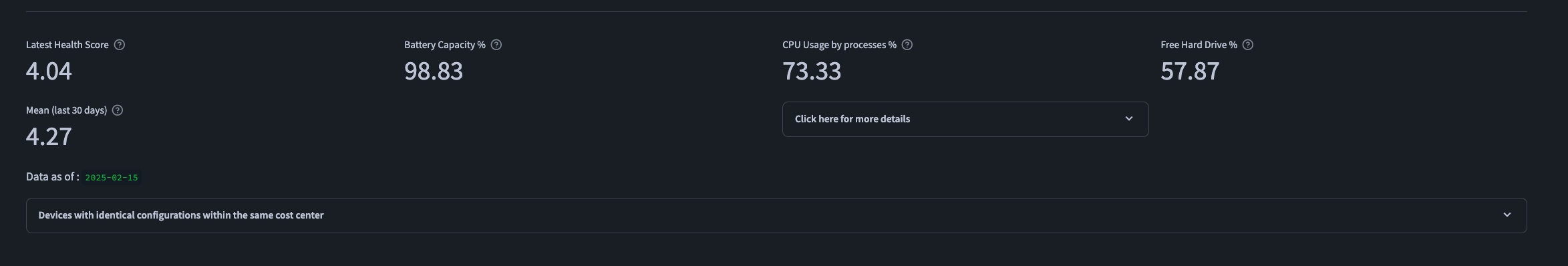

The above image gives us the daily snapshot of data along with the last 30 days mean. This allows a user of our Streamlit app to quickly view the latest data of any given laptop in our fleet.

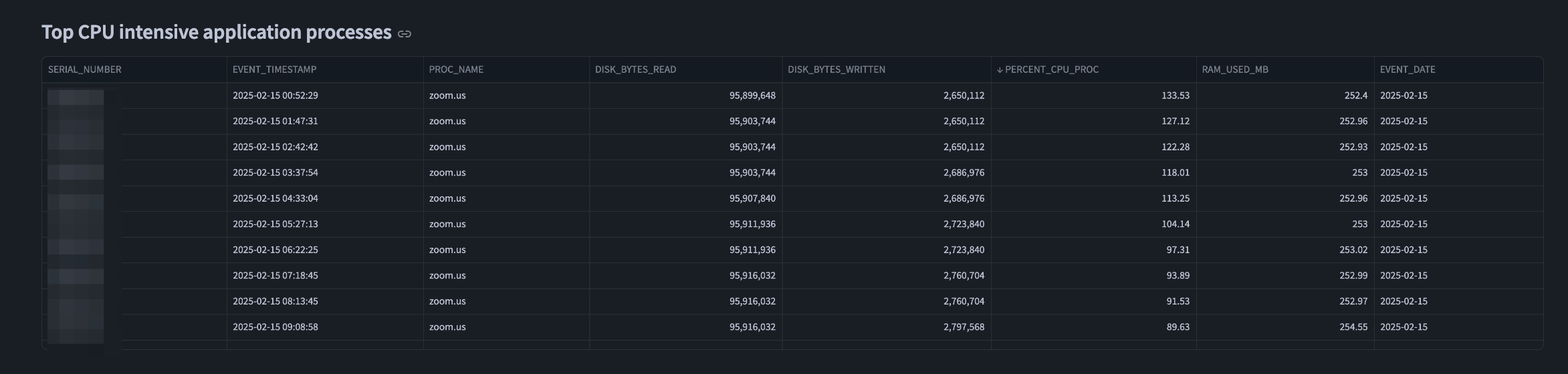

If we scroll down the page, next we can view the CPU intensive apps. Admittedly this is where my device shows that I have transitioned from an IC to a Manager, as a lot of my time is spent in Zoom meetings. However, this is very useful when looking at an end user’s computer. While we typically see processes like Chrome as the leader of compute consumption, we can also observe individual apps and processes that may chomp at a computer’s CPU. Think things like security agents, or IT agents we deploy to our fleet. We can now observe when they are in weird states and taking up more CPU than they normally do.

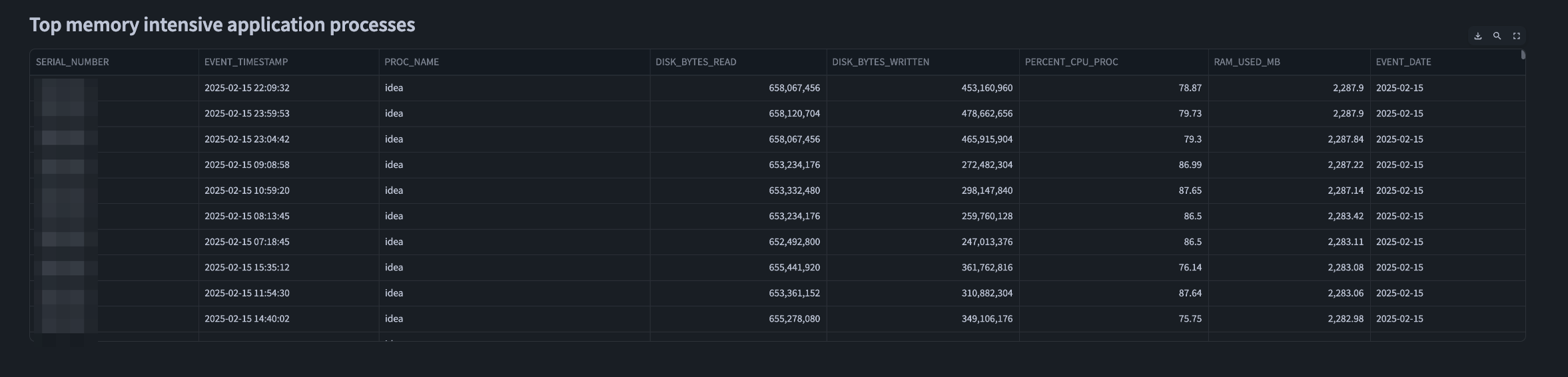

Then, if we keep scrolling down the app while looking at a single device, we can observe the processes that consume the most memory. This is where I can “prove” that I still do some IC engineering work, as my most memory intensive process is actually my IDE. I still write and test code from time to time, along with other software development workflows. So, my IDE is eating more RAM than Chrome for once. Typically, when I look up my devices, Chrome (or another Chromium browser) takes up the most RAM and CPU. This is common across our fleet as well.

Conclusion, Benefits & Roadmap

I cannot think of a single job I have ever had where we had this level of data telemetry and so many options to make that data actionable. I can also recall many times at past jobs when leadership, procurement teams, people who volunteered to test hardware and provide feedback, and so forth all got together to plan what hardware spec to buy and what refresh lifecycle we would use. To be honest, it was a lot of guesswork, with minimal amounts of data being used back then. The great thing about Snowflake, FleetDM, osquery, and apps like Streamlit is that they are available to the public. This means any org that wants to build this type of tech stack can. I do have friends at other big prestigious tech companies that have data pipelines and have built custom in-house solutions that likely accomplish similar things, but the solutions they have built will never be publicly available.

The benefits are there as well. You can use data like this to project cost savings initiatives, do data driven hardware refresh, and drill into devices that are performing well versus those that aren’t. IT support can also look at this as a way to be proactive on devices that are declining in UX score.

For the future we will want to collect data, assess it, iterate over it, and then improve it over time. This will allow us to expand the application’s features and enhance our data collections over time as well. This is definitely not a once and done project, but rather a once and sustain forever. When approaching these types of projects it is highly beneficial to really think long term about what your roadmap is going to be. I always suggest starting with a minimum viable product and getting that into the hands of your stakeholders. Then get feedback from them to iterate over. These types of projects and data applications are highly likely to have multiple use cases for multiple teams, so it is not a crazy idea to plan long term for stuff like this.

I know this was likely my longest blog post ever, and when I tried to trim it down to fewer words it just felt like it was missing context. If you actually read this entire thing, I thank you and am grateful for your time!