IT Teams are also Security Teams


If you have worked in a tech-related job, odds are you have also worked with various security teams throughout your career. In my nearly 25 years working in tech I have definitely observed a divide between IT teams and Security teams, but that doesn’t have to be the case. During my career I have seen security “punting labor” over the fence to IT teams, and I have seen IT teams dig their heels in when security wants to change things. This is just the wrong way for IT and Security teams to collaborate.

I am very lucky and privileged to have worked with some passionate and collaborative security teams in recent years. When you approach the relationship between IT and security as a collaboration, you get a much better return from every team’s work, and you find yourself working with security teams on a constant basis to deliver solutions to your organization. I have also been lucky enough to be mentored by some of our security leaders and engineers over the past few years in my current job. So, here is a good old blog post about how IT and Security should be best friends!

Engage as Partners

We have a security partner program at my current place of work. I am a security partner myself, as I have expertise in some specific tech domains. This allows me to apply that expertise cross-functionally, help other IT staff get better at security, and help security bring domain experts into their consultations. It is unrealistic to expect security staff to be domain experts on your tech stacks. Likewise, it is unrealistic for security to tell IT how to design and scale systems. This is a generalization, so don’t treat it as binary or absolute, because it is not. However, the generalization is the conceptual point I want to make here. Let the experts be experts, and work with each other from a partnership point of view. This is easier said than done, but here is one pro-tip for everyone who reads this post: breaking bread with other humans is a great way to start. One of our security leaders started bringing food to our meetings. He would make sure we all had our snacks, and we chatted over food. As cheesy as this sounds, it worked! We were more relaxed, we got to snack on some food, we started chatting more casually about things, and we agreed to work toward common end goals.

So, make a security partner program and train the security partners on how to be more security focused. Security is not just one department’s job; it is everyone’s job. It is very unrealistic to think that one department can cover every single risk an organization encounters on a daily basis, which is why we do things like end user security training, partnerships, sync meetings, and so forth.

I volunteered to be a security partner 4-ish years ago, and have helped many folks across our org write threat models. I have been able to apply my work experience, my expertise, and my all-around systems knowledge to this security partnership.

Threat Model Your Tech Stacks

This one can be controversial, but I personally came to terms with it a few years ago. When I was a Principal Engineer designing systems at scale, I was the expert designing them. I knew the tech’s features and abilities, and I was best qualified to describe how the system worked and how data flowed, which is the bulk of a threat model. I also think that if you are in a position to design a system, the way you qualify yourself to build it is by being able to threat model it. I know that sounds a bit harsh or maybe absurd to some folks, but hear me out please. The designer and implementer will have the most intimate knowledge of the system, so they should be able to describe how their tech stacks work together. If they cannot, maybe they should learn how those stacks operate before building them in production. That is why we have POC and test environments, and why we run pilots, so we can get to know our tech stacks well before we ship them to production!

I have learned how to use STRIDE, which is a threat modeling framework. It is honestly not too difficult to understand the bits you need once it finally clicks! While that is true of most things, at least this is a framework, so it is very repeatable once you learn it. The table below spells out what S.T.R.I.D.E. stands for, and defines each threat to model in your tech stack.

| Threat | Desired property | Threat Definition |
|---|---|---|
| Spoofing | Authenticity | Pretending to be something or someone other than yourself |
| Tampering | Integrity | Modifying something on disk, network, memory, or elsewhere |
| Repudiation | Non-repudiability | Claiming that you didn’t do something or were not responsible; can be honest or false |
| Information disclosure | Confidentiality | Providing information to someone not authorized to access it |
| Denial of service | Availability | Exhausting resources needed to provide service |
| Elevation of privilege | Authorization | Allowing someone to do something they are not authorized to do |

As a systems designer, you should be able to draw a data flow diagram (commonly referred to as a DFD) of your tech stacks and define each threat throughout the DFD. Each threat should have a mitigation or compensating control. IT and Security teams should walk through the threat model together so they both better understand what is being shipped to production.
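To make the "every threat needs a mitigation" rule a little more concrete, here is a minimal Python sketch. The class names (`Stride`, `Threat`, `Process`), the `unmitigated` helper, and the example data are all hypothetical and made up for illustration; this is one way you might track the idea, not a standard tool or the way my org does it.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stride(Enum):
    """Each STRIDE threat paired with the desired property it violates."""
    SPOOFING = "Authenticity"
    TAMPERING = "Integrity"
    REPUDIATION = "Non-repudiability"
    INFORMATION_DISCLOSURE = "Confidentiality"
    DENIAL_OF_SERVICE = "Availability"
    ELEVATION_OF_PRIVILEGE = "Authorization"


@dataclass
class Threat:
    kind: Stride
    description: str
    mitigation: str = ""  # empty means nobody has written one yet


@dataclass
class Process:
    """One process (circle) in the DFD and the threats labeled on it."""
    name: str
    threats: list[Threat] = field(default_factory=list)


def unmitigated(processes: list[Process]) -> list[tuple[str, Stride]]:
    """Return (process name, threat kind) for every threat lacking a mitigation."""
    return [
        (process.name, threat.kind)
        for process in processes
        for threat in process.threats
        if not threat.mitigation.strip()
    ]


# Hypothetical example data, not taken from a real threat model.
auth = Process("Authentication", [
    Threat(Stride.SPOOFING, "Stolen API token is replayed", "Short-lived tokens"),
    Threat(Stride.REPUDIATION, "Caller denies making a request"),
])

for name, kind in unmitigated([auth]):
    print(f"{name}: {kind.name} ({kind.value}) has no mitigation yet")
```

A list like this is handy to bring into the walkthrough with security, since the open items are exactly the ones worth talking about.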

Building a Threat Model

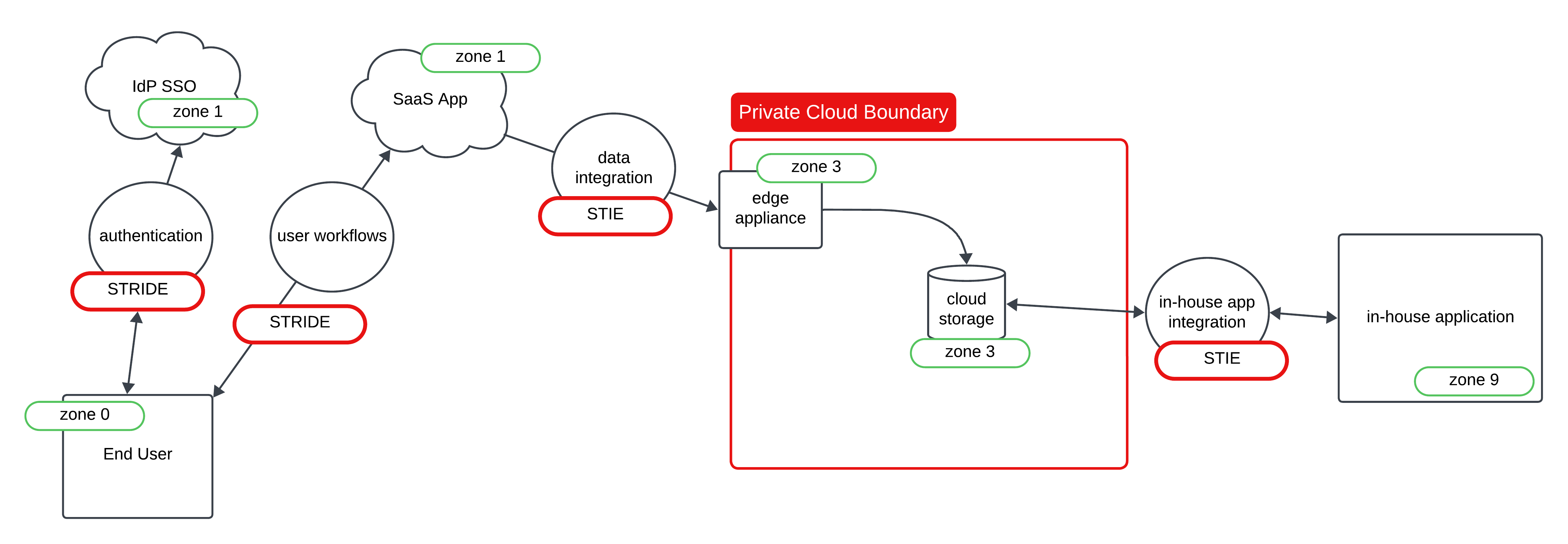

Let’s assume we have a simple integration we are building from a SaaS application into cloud storage, where our in-house application we have built will access the cloud storage data to do the thing it was designed to do.

A simple DFD:

Quick notes on the DFD:

- Entities are defined by the square-like shapes (may not be exact squares, could be rectangles haha!)

- Processes are defined by the circles (this is to show what actions or workflows are taking place)

- Label each threat with the corresponding letter in S.T.R.I.D.E.

- Label the trust zones (will explain below)

- Take the DFD and some form of notes describing the diagram to consult with your security teams

Trust zones took me a moment to really get. They are arbitrary values you assign, and they work more as an offset than as a scale. Assume trust zone 0 is the absolute lowest trust zone, and trust zone 9 is the absolute highest and most trusted of zones. What you want to represent in your DFD is that you are either going up in trust zones or going down in trust zones. Typically, security teams will have some baseline definitions of trust zones. For example, the end user is always in trust zone 0 no matter what, and only systems we fully design and build are in trust zone 9. I used this example in the DFD above as well. You do not own SaaS apps, nor do you have direct access to the host, so what goes on inside the app is a bit of a mystery box to you and your org. However, you likely have some level of admin access and some level of logging, and the app isn’t an end user. So, to stay relative to the flow, I marked SaaS apps as trust zone 1, since we can get some data out of the SaaS app and we have some control over it.

I marked the private cloud as a trust zone above the SaaS apps, as we have more controls in this space. These include, but are not limited to, IAM, role assumption, RBAC, permissions, and various other protections and limitations we can impose to follow the least privilege model. The in-house app is something we can put in the highest trust zone, zone 9, as the company owns the code, employs the devs that built it, and essentially has full control over it. You could just as easily bump the SaaS apps to zone 3 and the private cloud to zone 6, and the in-house app would remain in trust zone 9, because it is all relative to the model. It still conceptually makes sense. This was a big hang-up for me when I was first learning how to threat model. Now that I know these are arbitrary values to show a relative movement in trust zones (going up or going down), it is much easier for me to grok.

When a data flow changes trust zones, an information disclosure threat (the I in S.T.R.I.D.E.) should be automatically generated. This is because your data is traversing systems you have varying degrees of control over.

So, your security team should define some standard concepts you can follow, and then you just need to apply them relative to your tech stacks through the DFD.
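If it helps to see the "relative, not absolute" idea in code, here is a small Python sketch. The `Flow` class, the `zone_changes` helper, and the entity names are hypothetical. The text above does not give a number for the private cloud zone in the first numbering, so the 5 used here is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One arrow in the DFD, from a source entity to a destination entity."""
    source: str
    destination: str


def zone_changes(flows, zones):
    """Return (source, destination, direction) for every flow that changes trust zone.

    Each returned entry is a spot to label an information disclosure (I) threat.
    """
    changes = []
    for flow in flows:
        src, dst = zones[flow.source], zones[flow.destination]
        if dst != src:
            direction = "up" if dst > src else "down"
            changes.append((flow.source, flow.destination, direction))
    return changes


FLOWS = [
    Flow("SaaS app", "Cloud storage"),
    Flow("Cloud storage", "In-house app"),
]

# Two numberings of the same model; the cloud storage value 5 is assumed.
ZONES_A = {"SaaS app": 1, "Cloud storage": 5, "In-house app": 9}
ZONES_B = {"SaaS app": 3, "Cloud storage": 6, "In-house app": 9}

for source, destination, direction in zone_changes(FLOWS, ZONES_A):
    print(f"I: {source} -> {destination} goes {direction} in trust")
```

Running `zone_changes` with `ZONES_A` or `ZONES_B` flags the same flows with the same direction, which is the whole point: the numbers only matter relative to each other.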

Use Your Systems Knowledge to Describe Threats

One of the ways we have described threats is with a syntax called Gherkin, which uses specific keywords to describe a threat for each scenario or process. It goes something like this:


I will admit, that is not my best work above when writing out the threat and mitigations, but I tried to keep it generic enough that it makes sense. So, this would represent the Authentication process in the DFD. You would then go through each process in your DFD and use the above syntax to describe your threat. You don’t have to use Gherkin to do this, and Gherkin is more of a framework than an authoritative language. You can bend it to your preference and use it in the way that makes the most sense for your org. Also, the description covers each letter of S.T.R.I.D.E. that was used.
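For anyone who has not seen Gherkin-style text before, here is a rough Python sketch that renders one threat using the standard Gherkin keywords (Scenario, Given, When, Then, And). The `render_threat` function, its parameters, and the example wording are all hypothetical. This is not the exact layout my org uses, just an illustration of the shape.

```python
def render_threat(process, letters, given, when, threat, mitigation):
    """Render one DFD process threat as Gherkin-style text.

    letters is the list of S.T.R.I.D.E. letters the scenario covers.
    """
    lines = [
        f"Scenario: {process} [{', '.join(letters)}]",
        f"  Given {given}",
        f"  When {when}",
        f"  Then {threat}",
        f"  And {mitigation}",
    ]
    return "\n".join(lines)


# Hypothetical example for an Authentication process.
print(render_threat(
    process="Authentication",
    letters=["S", "I"],
    given="the SaaS app holds an API token for cloud storage",
    when="the token is stolen and replayed by an attacker",
    threat="the attacker can impersonate the SaaS app and read stored data",
    mitigation="tokens are short-lived, scoped to one bucket, and sent only over TLS",
))
```

Keeping the rendering in a function means every process in the DFD gets described in the same shape, which is most of the value of a stricter syntax.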

Another pro-tip to fast track this: some common threats, like information disclosure, can oftentimes be mitigated by enforcing TLS/HTTPS traffic, which almost everything does nowadays. So, you don’t need to overthink each threat.
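As a hedged example of what "enforcing TLS/HTTPS" can look like in an in-house app, here is a short Python sketch using only the standard library. The helper names `strict_tls_context` and `require_https` are made up for this post, and TLS 1.2 as the floor is an assumption; use whatever minimum your security team has set.

```python
import ssl
from urllib.parse import urlsplit


def strict_tls_context() -> ssl.SSLContext:
    """Build a client TLS context that verifies certificates and hostnames."""
    context = ssl.create_default_context()  # certificate and hostname checks are on by default
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed floor, adjust to your policy
    return context


def require_https(url: str) -> str:
    """Return the URL unchanged, or raise if it is not an HTTPS endpoint."""
    if urlsplit(url).scheme != "https":
        raise ValueError(f"refusing non-HTTPS endpoint: {url}")
    return url
```

You could then pass both into a request, for example `urllib.request.urlopen(require_https(url), context=strict_tls_context())`, so a plain HTTP endpoint fails loudly instead of quietly leaking data.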

I also find Google Docs to be a great way to actually describe the threat model, simply because a Google Doc is a living and collaborative document. You can tag folks in it, assign tasks, and so forth. So, something like Gherkin isn’t even required, but it is something I learned, and I feel it has value if you are looking for somewhat stricter standards in your threat modeling.

Using the 80/20 Model

Threat modeling should follow something around the 80/20 model, give or take, where you are expected to cover about 80% of the data flows, processes, and threats. If your security team wanted to force 100% coverage on a threat model, then nothing would get done in a timely fashion, and you could spend weeks or longer chasing down every edge case. You can focus on getting about 80% of the threats covered up front and the last 20% as you go through the process, or after you go to production. No one wants to block folks from shipping product, or do anything else that impacts the business in a negative way. So, both IT and security teams should work together to streamline this process.
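If you want a quick gut check on where a threat model stands, the arithmetic is simple enough to sketch in Python. The `coverage_report` function, the process names, and the hard 0.8 target are hypothetical; in practice the 80% is a rough guideline, not a gate.

```python
def coverage_report(processes: dict[str, bool], target: float = 0.8):
    """Summarize how much of the DFD has a written threat description.

    processes maps a process name to True once its threats are described.
    Returns (fraction covered, whether the target is met, names still to do).
    """
    if not processes:
        raise ValueError("the DFD has no processes to cover")
    remaining = [name for name, done in processes.items() if not done]
    fraction = (len(processes) - len(remaining)) / len(processes)
    return fraction, fraction >= target, remaining


# Hypothetical DFD with five processes, four of them described so far.
fraction, good_enough, remaining = coverage_report({
    "Authentication": True,
    "Export": True,
    "Upload": True,
    "Read": True,
    "Archive": False,
})
print(f"{fraction:.0%} covered, follow up later on: {remaining}")
```

The `remaining` list is the 20% you carry forward and finish as you go, or after you ship.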

Building Security Minded Development

The real end goal of something like this is to get every individual to think about security when they are designing systems. Doing your own threat models helps this cause, and this is probably the biggest reason why I support the concept of having security partners, having IT involved in threat modeling, and having implementers threat model their own tech stacks. In the end, if done right, this can transform an entire organization into a security driven one, and everyone benefits from this. I do honestly believe that this process has made me a better engineer. It has made me think more and more about secure design, and honestly about convoluted workflows. If something is way too complex to threat model, then perhaps it is just too complex to even do. That is a sign for me personally to pivot, and look at accomplishing my goals another way. That is not because it is too difficult and I want it to be easier, but because with complexity comes a ton of other problems. The threat model process sorta surfaces things like that.

CPE Teams are Security Teams

If you work in the CPE space (Client Platform Engineering), I would argue you are already working in security. A lot of what a CPE does is around securing end user devices, reducing risks on those devices, and acting as a front line defense for them. I believe CPEs are also more aligned with software engineers and DevOps engineers than with the classic sysadmin. As a CPE team member, you can leverage your coding skills, GitOps, and automation pipelines to really help secure the end user devices at an organization. So, CPE teams are already doing security work. Building threat models for their automation systems and infrastructure should not be too difficult given their expertise in building and designing said systems.

It Truly is a Community Effort

That age-old saying, “It takes a village,” is very applicable here. Security will need to work on building an internal community around enabling teams to be more security driven. Security will also need to listen and take feedback from those teams to ensure there is actual community and collaboration happening. Likewise, IT needs to put forth its own effort in security. Once you build a culture of collaboration and community, it makes the debates easier to have. Bonus if your org has lots of data, because you can use data to help drive these conversations and help make decisions.

So, in the end we all work together and are on the same team, and we need to act like it.