Managing Licensed Software with Data

Turn an IT Cost Center into an IT Cost Savings Center!

So many times in my career I have heard IT/Ops teams referred to as overhead. I have also heard IT described as “just keeping the lights on,” along with every adjacent variation of that phrase. These types of statements have always made me want to prove that IT is much more than that. I have always tried to implement cost savings where the data lets us definitively decide where to cut. You never want to cut costs around things that have a high positive impact, since in many ways you do get what you pay for, and what you pay for may be very much worth it. So, IT is a cost savings center, a service provider, an enabler, an enhancer, and much more than the stereotypes you might hear about how people perceive IT.

How many times have you implemented a software stack from the mid-to-low bidder range over the more expensive stacks, and regretted the purchase months later? How many times have you just guessed at how many licenses you need during a true-up? Are you buying the right license bundle? Companies like Adobe and Microsoft love to shoehorn you into mass license bundles with discounts at higher purchase volumes. How often have you made mistakes in this area and over-purchased or under-purchased licenses?

Previously, I blogged about how we track licensed software by ingesting the application usage data from Munki. That post goes into detail on how we integrated our osquery config to consume the Munki SQLite database of application usage. This is how we build the baseline of our application usage metrics.

Defining the Problems

What we are striving to achieve here are a few simple things.

- Enable cost savings around licensed software

- Enable procurement teams with better data to forecast true-ups and renewals

- Enable our asset team to build data-driven licensed software lifecycle workflows

That is really it. We want to save money where we can and where it makes the most sense, backed by the data we collect. We also want to ensure that the user experience is good. You need a copy of Adobe Photoshop? Just file a request ticket and we will automatically provision you a license, no questions asked. You get the license and the access very quickly. However, if you stop using Photoshop for a set number of days, we will revoke that license and save money. If you need the license again, just file a ticket and get another license automatically. This balances a good UX with a cost savings initiative.

We want to enable end users to have access to the best tools we can provide them to do their job with the least amount of friction to get them, while also enforcing cost savings initiatives wherever we can.
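The reclaim rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 90-day threshold, the function name `licenses_to_reclaim`, and the sample users are all hypothetical, and the real workflow would read last-use dates from the usage data rather than from a hard-coded dictionary.

```python
from datetime import date, timedelta

# Hypothetical threshold; pick whatever number of idle days fits your policy.
IDLE_DAYS = 90

def licenses_to_reclaim(last_used, today, idle_days=IDLE_DAYS):
    """Return users whose most recent use is older than idle_days.

    last_used maps a user to the date of their latest recorded use,
    or None if no use was ever recorded.
    """
    cutoff = today - timedelta(days=idle_days)
    return sorted(
        user for user, seen in last_used.items()
        if seen is None or seen < cutoff
    )

# Made-up sample data for illustration only.
example = {
    "alice@example.com": date(2024, 5, 30),
    "bob@example.com": date(2024, 1, 15),
    "carol@example.com": None,
}
reclaim = licenses_to_reclaim(example, today=date(2024, 6, 1))
print(reclaim)
```

Treating "never used" the same as "idle too long" is a design choice in this sketch; you may prefer a grace period for freshly provisioned licenses.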

How the Donuts are Made

Last month I went to India to visit our team and offices in Pune. While onsite, I tasked the India side of my team with designing and building an app that makes our application usage data more actionable and helps us manage the licensed software lifecycle here at Snowflake. The team brainstormed and built the app over the week I was in town. I thought this would be a great way for all of us to collaborate in person, since we were all going to be in the office at the same time.

What the team built basically comes down to these specific data points joined in Snowflake and then built into a Streamlit app to help make it actionable:

- Munki application usage data

- HR directory data (email, department, cost center, manager name, etc)

- Asset data (primary, secondary, asset function, etc)

- App group membership (what users are in groups scoped to apps)

These are the only building blocks you need to get started. This should get you enough context to replicate what we have built here at Snowflake.
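To make the join concrete, here is a minimal sketch that uses Python's built-in `sqlite3` as a stand-in for Snowflake. Every table name, column name, and row below is an assumption made up for illustration; our actual schema is not shown in this post. The idea is to start from who holds a license (group membership), attach HR context, and left join usage through the asset so that licensed users with no usage still appear.

```python
import sqlite3

# In-memory SQLite stands in for the warehouse; all names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE app_usage (serial TEXT, app_name TEXT, last_activated TEXT);
CREATE TABLE assets (serial TEXT, owner_email TEXT, asset_function TEXT);
CREATE TABLE hr_directory (email TEXT, department TEXT, cost_center TEXT);
CREATE TABLE app_groups (email TEXT, app_name TEXT);

INSERT INTO assets VALUES ('C02ABC', 'alice@example.com', 'primary');
INSERT INTO assets VALUES ('C02XYZ', 'bob@example.com', 'primary');
INSERT INTO hr_directory VALUES ('alice@example.com', 'Design', 'CC-100');
INSERT INTO hr_directory VALUES ('bob@example.com', 'Finance', 'CC-200');
INSERT INTO app_groups VALUES ('alice@example.com', 'Adobe Acrobat Pro');
INSERT INTO app_groups VALUES ('bob@example.com', 'Adobe Acrobat Pro');
INSERT INTO app_usage VALUES ('C02ABC', 'Adobe Acrobat Pro', '2024-05-30');
""")

# One row per licensed user and app; last_used is NULL when no usage exists.
rows = conn.execute("""
SELECT g.email, h.department, g.app_name, MAX(u.last_activated) AS last_used
FROM app_groups g
JOIN hr_directory h ON h.email = g.email
LEFT JOIN assets a ON a.owner_email = g.email
LEFT JOIN app_usage u ON u.serial = a.serial AND u.app_name = g.app_name
GROUP BY g.email, h.department, g.app_name
ORDER BY g.email
""").fetchall()

for row in rows:
    print(row)
```

The left joins matter here: an inner join on usage would silently drop exactly the users you most want to find, the ones who hold a license and never opened the app.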

Note that this is for macOS data only. We do have Windows application usage telemetry already enabled and ingesting into Snowflake, but we have not had time to implement it in our app just yet. That will come in our next release. On the Windows side we collect the UserAssist data, which tracks execution time of all exe files on disk.

We don’t really have this problem on Linux right now, but in the future if we need to heavily purchase licensed software for our Linux boxes, we will have to figure out how to track it there.

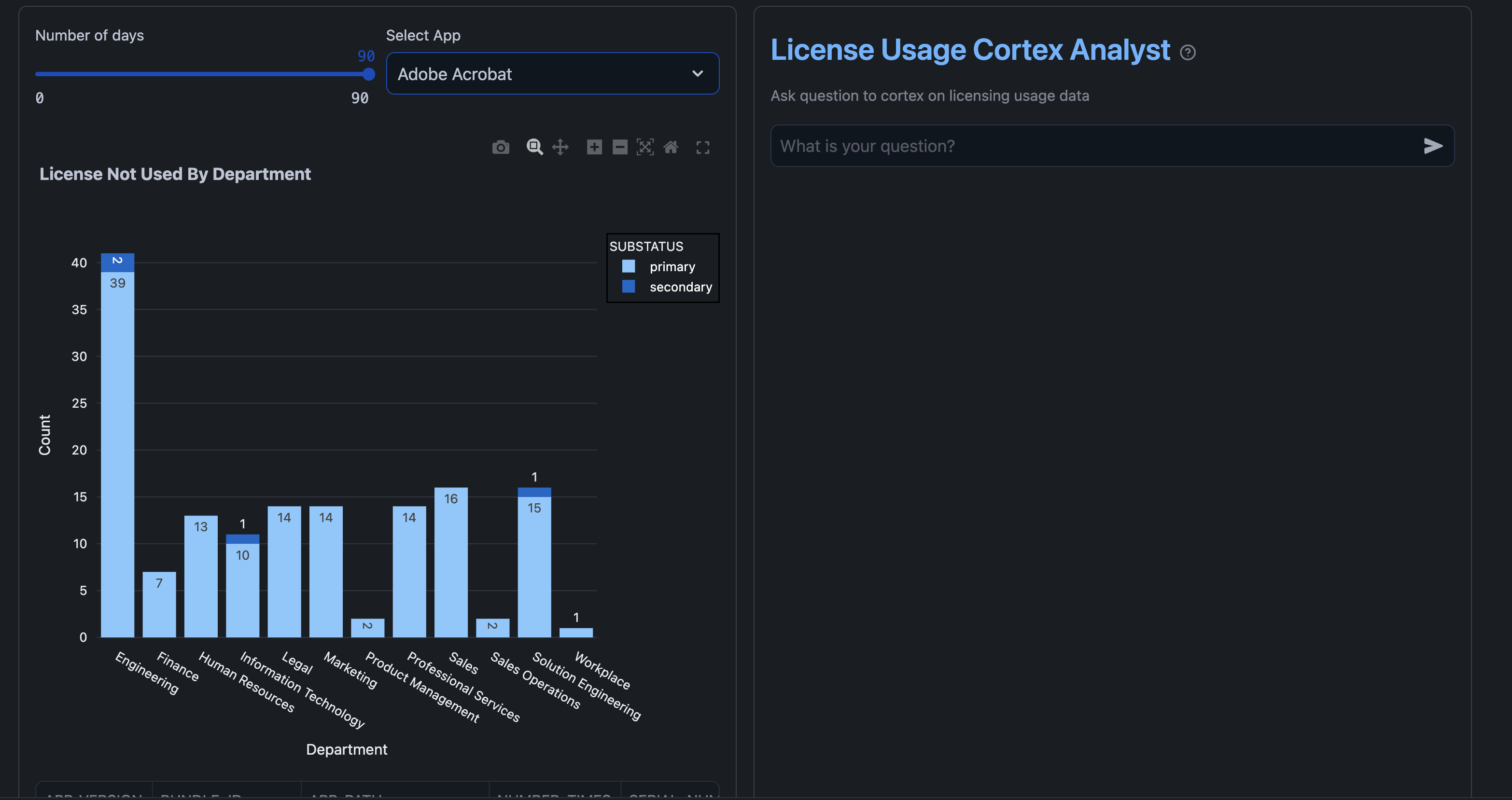

Streamlit Interface

The screenshot above shows all the copies of Adobe Acrobat Pro that have not been used in more than 90 days.

If you didn’t read my previous blog post on Munki application usage data, the data tracks activations of an application. Every time you tab to an app and make it the foreground application in macOS, that generates an activate event. This way we can assume that every time you bring an app to the foreground you are actively using it.

This should mitigate the edge case in the data where a human has an app open and running in the background but isn’t actually using it. This is me with Excel. The only time I ever open Excel is when I get emailed a spreadsheet. Then Excel just sits there running until I quit it, or until I get emailed another spreadsheet. This means I have long run times for Excel in the background, but I honestly barely ever use it, which is why my O365 license got retired. I pretty much only use Google Docs these days.
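Here is a small sketch of why counting activations is a better signal than run time. Only the "activate" event is named in this post; the "launch" and "quit" event names, the tuple layout, and the sample data are assumptions for illustration.

```python
from collections import Counter

# Illustrative event stream as (app, event) pairs. Only "activate" comes
# from the post; "launch" and "quit" are assumed names for this sketch.
events = [
    ("Excel", "launch"),
    ("Excel", "activate"),
    ("Keynote", "launch"),
    ("Keynote", "activate"),
    ("Keynote", "activate"),
    ("Keynote", "activate"),
    ("Keynote", "quit"),
]

def activation_counts(events):
    """Count how many times each app was brought to the foreground."""
    return Counter(app for app, event in events if event == "activate")

counts = activation_counts(events)
print(counts)
```

In this made-up stream Excel was launched and never quit, so a run-time metric would make it look heavily used, while the activation count shows it was touched once.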

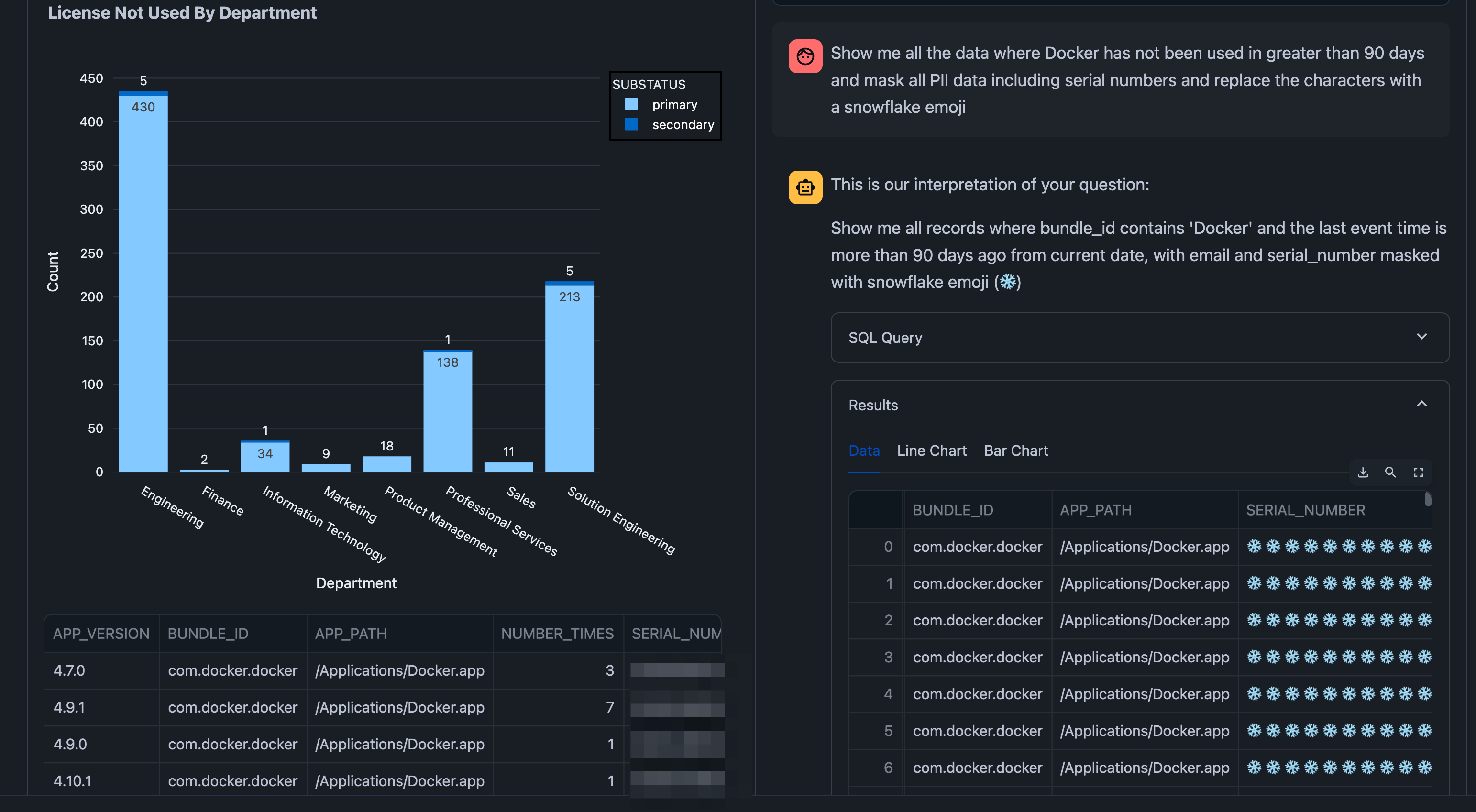

What is that Cortex Analyst?

My team surprised me with this feature. They integrated Snowflake’s Cortex Analyst, which lets users query data using natural language. Consumers of this app who may not have deep SQL/Python skills can simply ask Snowflake a question in natural language and it will generate a response.

Upon using this feature, you may immediately notice that it generates SQL code based on the prompt the user entered. This is also quite handy for those of us who do write code. I consider myself moderately good at SQL. I can write queries and CTEs, build data models, dashboards, and data apps, and do generalist analyst type work. Even though my background is classically in IT, I have picked these skills up over the years of being volun-told I was the new DBA since I had “some SQL experience.” However, I can never write a query in a matter of seconds, which Cortex can. The fact that it shows the query is great, because if something is slightly off or wrong from my prompt, I now have a fully functional query I can tweak.

Let’s assume this is our prompt:

|

|

Cortex Analyst in return would output a query like this and execute it:

|

|

So, it generated the above query in a few seconds. I could never write that query in a few seconds. From an engineering perspective, Cortex Analyst can very quickly generate syntactically correct SQL for you to tweak if the prompt doesn’t get you to your desired end state. I also see this as a huge accessibility feature for folks who want to leverage all the sweet data we have but may not have the SQL analyst or developer skills to tweak queries on the fly.

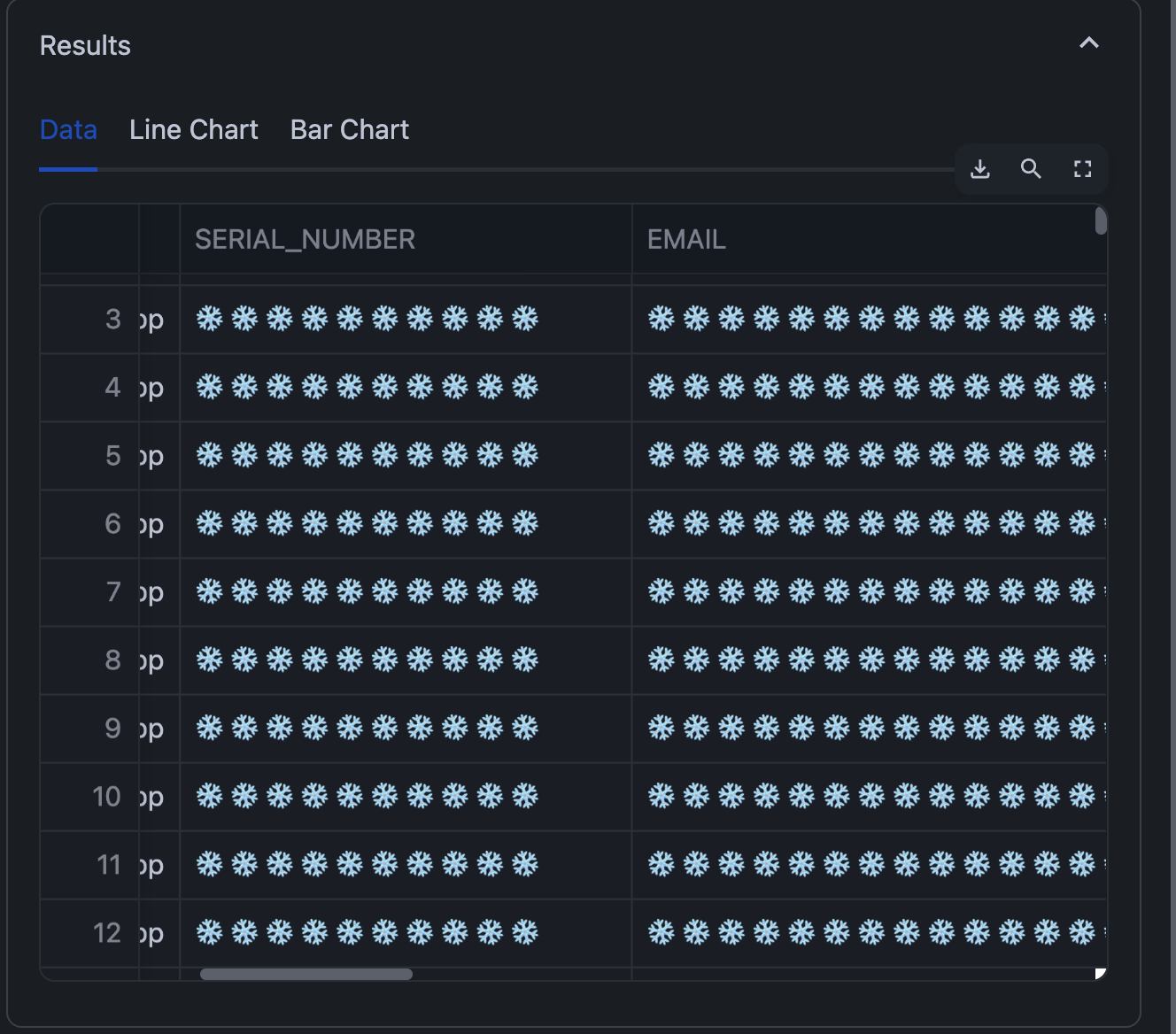

The results:

So, a couple of really cool things happened here. I was able to mask the PII data with a ❄️ simply by asking Cortex to do so. This allows me to share this data in a blog post, or with a third party like a reseller or a direct vendor. With the PII scrubbed from the query results, the data is much more shareable outside my org if I need or want to share it with someone else.

Now all the PII data is just a bunch of snowflakes!
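In our app the masking was done by Cortex from the prompt. If you wanted the same effect on query results you already have in hand, a plain Python stand-in could look like the sketch below. The column names, the `mask_pii` helper, and the sample row are hypothetical and are not part of our app.

```python
SNOWFLAKE = "\u2744\ufe0f"  # the snowflake emoji

# Hypothetical column names treated as PII in this sketch.
PII_COLUMNS = {"email", "manager_name"}

def mask_pii(rows, pii_columns=PII_COLUMNS, mask=SNOWFLAKE * 3):
    """Return copies of rows with PII columns replaced by a fixed mask.

    A fixed-length mask avoids leaking the length of the original value.
    """
    return [
        {key: (mask if key in pii_columns else value) for key, value in row.items()}
        for row in rows
    ]

# Made-up sample row for illustration only.
rows = [
    {
        "email": "alice@example.com",
        "manager_name": "Dana",
        "department": "Design",
        "days_idle": 120,
    },
]
masked = mask_pii(rows)
print(masked)
```

The function returns new dictionaries rather than editing the input, so the unmasked results stay available for internal use.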

Just the Beginning

This was our first attempt at integrating AI into one of our data apps. I can already see us iterating on this, improving it, and adding more AI features to future products. We also plan on building more data-driven cost savings tools in the future. My team is already thinking about tracking more than just licensed software in this app. We want to look at Windows Enterprise licenses (OS upgrades), Jamf and other MDM licensing, agent licenses, and more. So, not just the third-party apps end users use, but eventually all of our licensed tools. Thanks for reading!