IT & Ops Data with Snowflake Part I

Be Data Driven in IT/Ops with Snowflake

Technology changes very fast, and it keeps getting bigger and more complex. We have so many systems these days, our systems have systems. It doesn’t have to be complex and daunting to get reliable, and up-to-date data. Getting accurate data, while getting it fast, is key to developing data driven strategies. We no longer have to live in the constraints of capacity, limited storage, and limited scalability. With the power and scale of the cloud, you can now simply just ship all of your IT/Ops data to a centralized location and share it among your teams.

IT/Ops shops often find some sort of niche tool that was designed for their purpose. These tools are fine, but they are often complex, and require specialized knowledge. Then sometimes you are faced with figuring out what data to ship. For example, some data tools might transform the data during the shipping process. Breaking it out into say a JSON doc, and then creating indices for these files, and tagging them with metadata. This made it even more complex as you would have to design all of these things up front, or suffer the rite of passage of remodeling your data ingest and re-indexing your data when you need to change something. Snowflake has solved these problems in a pretty straight forward way. With Snowflake, you just ship all your data. You can then use post processing to make the data more actionable.

Ship all the raw data you can, and then store that raw data in a schema with in the Snowflake platform. No need to spend countless hours of labor on this. You also do not have to worry about indexing, or tagging your data. You can just post process all your raw data with in Snowflake.

Before you Begin

When you get to the point of having multiple data pipelines into a data tool which you can easily share data from multiple sources of truth, you must convince yourself of the following:

You will probably be wrongThe data will show you when you are wrong(when you are right too!)Use the data to fix what is wrongFix things upstream, so next data ingest it will be fixed downstreamEstablish baselines, sources of truth and context to your dataData with out a source of turth or context is sometimes not as valuable

I really cannot stress this enough. I had to admit some methods I had been using for years, which I thought to be pretty solid, were in fact wrong. I also stopped treating MDM data as a single source of truth. When you think about it, this makes actual logical sense. MDM admins often only have the MDM tools to work with though, so they build deep knowledge and skills around these tools. So much, that it gives them Tunnel Vision

The big lesson learned here is that the MDM collecting third party agent data is at best a nice to have, but the actual source of truth

is cloud data from the agent’s SaaS. That is what matters the most in context. Not if some Launch Daemon is running, or an

agent binary can return stdout, or the plethora of other tricks us Mac Admins have come up with over the years to verify

if local agents are actually healthy. I found out through collecting data that methods I had been using for years really

were not as effective as I thought they were. I discovered I was wrong.

To define a healthy agent, that agent has to submit data back to its cloud tenant, and if the agent is not submitting data, then it does not matter what the local state data says.

So, now I have set my source of truth to the App stack each agent talks to, and the context of the data is around when was the last time that agent phoned home and submitted data? The rest of the data are just nice to haves, and sometimes that data is not as useful as we thought it was. All I had to do was build my context and request that set of data to the teams that owned that data, and they shared that data to me directly. Now I have the ability to query agent connection statuses from the actual source of truth of the agent, and I am not relying on MDM data to detect these failures for me.

All queries and data here are samples from actual data. While they are small sample sets, and things have been changed to share in this blog, the concepts are very real world applicable.

What Data Should I ship?

If you are new to shipping data, and aren’t quite sure what to ship, here are some high level starting points I recommend:

Asset Data(hardware, software, applications, etc)Event Data(webhooks, event based APIs, etc)Log Data(any logs that you need info on, or just ship all the logs)Tools Data(MDM, security agents, etc)

Use Asset Tracking Systems as a Source of Truth

At my current work, we have Service Now as our asset management

system. We track what Org assets are assigned to what human in this system. Also, we spent lots of effort cleaning up our

sub statuses of assets in Service Now. Things like classifying systems as primary, or as secondary. We have also

classified assets that are designated as Zoom Room computers. Leveraging data like this helps a ton on things you haven’t

even planned for yet. Anything you add upstream in Service Now, will be ingested downstream into Snowflake later on. So,

we use Service Now as our source of truth for asset tracking.

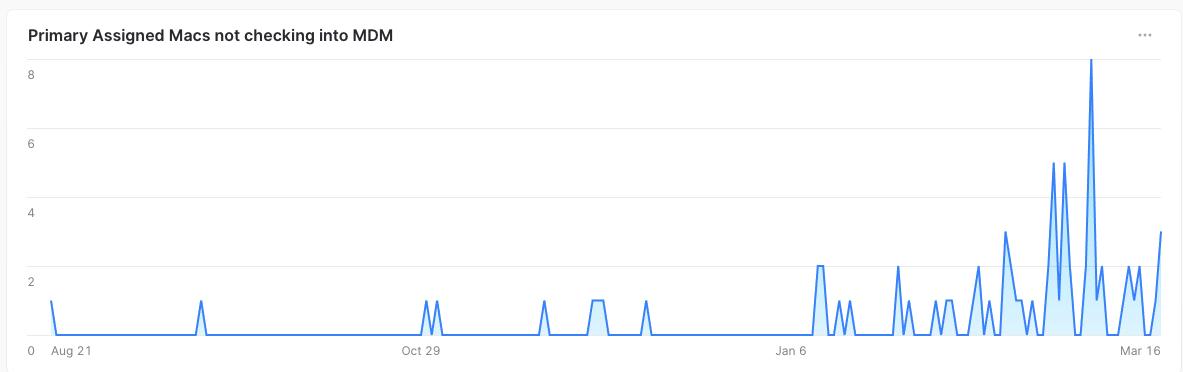

One example is that we query to check if primary assets are checking into MDM in the last 30 days. We chose 30 days as the metric for this simply because employees take time off. Plus there are holidays, and many other reasons an employee would not be working. However, it is probably extremely rare for an employee to be out of the office and offline for over 30 days.

Example Query: Primary Assets from Intune that have not checked in for over 30 days

|

|

Example Query: Primary Assets from Jamf that have not checked in over 30 days

|

|

Discoveries from the Data

All software agents can break, MDM clients can get into weird states, and even things like an OS update can cause issues. A lot of times you just need to reboot the computer to fix these too. This is just an easy way to report on them and make the data actionable. We are also using Service Now as the source of truth here and not our MDM services. This is really just because MDM solutions aren’t normally true asset management systems. Use the right tool for the right job.

Visualization Example:

What If Systems Are Missing From the Asset Tracker?

If you are an Org that allows BYOD, you might not have a great way to tell what devices are BYOD. Devices sometimes also may not end up in your asset system for whatever reason. With having multiple data sources in Snowflake you can easily join tables from these sources to craft such data sets. BYOD systems are probably not in your asset tracker as they are not Org owned. A failure also may occur in your process to import assets, or data was entered wrong. This is also pretty easy to solve when you have access to the proper data.

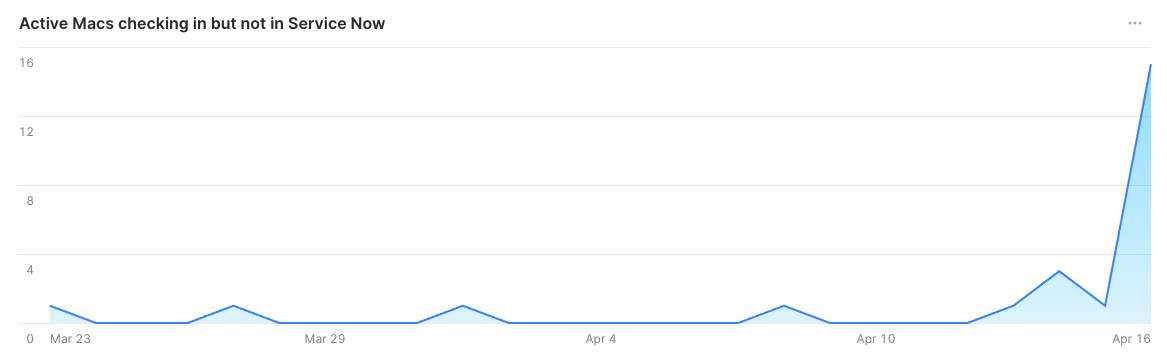

Example Query: Devices Checking into MDM but not present in Service Now

|

|

In the above query we are using each unique device’s last check-in into Jamf from the Webhook Event in the past 30 days, and we only need the event data for this. Then if we cannot join that unique ID (serial number in this case) to the Service Now assets table, then it does not exist in Service Now. You also now have all the serial numbers which you can use to create those asset objects in your asset tracker.

Visualization Example:

Discoveries from the Data

What we discovered is that most of the time when a device does not show up in Service Now, there is likely some sort of

data entry issue. Either our automation with our resellers had a glitch, or perhaps a human mistyped a character in for

an asset that was manually entered. We also discovered that sometimes if you scan a serial number barcode off of the box

the laptop was shipped in, it will append the beginning of the string with a S. On rare occasion we just missed getting

it in our asset tracker. My favorite discovery though by far with this data point, was that if a human enters a serial number

in Service Now with all lower case characters, it will fail on the table join. The matching is case-sensitive.

To fix this, I simply now sanitize my data in post processing by converting all serial numbers to all upper case with the

upper() function. This goes to show you how powerful this data platform is. Sure, we could have fixed our data upstream, but

will you ever be able to really stop humans from making simple human mistakes? Having this data allowed us to drill down into each edge case and failure point and discover why

it was happening. With the best part being is that we have the data to fix it!

Using Event Based Data to Monitor Jamf Cloud

SaaS is great! I love SaaS Apps, because as an IT/Ops person that means all the management of the App is on the vendor. I can focus on other things, and not have to worry about the app itself, nor the infrastructure required to self host the App. One of the very few downsides of using SaaS is that you often lack access to data. This is especially true when looking at operational data, or access to data that requires direct access to the host. In this case we will be using event based data shipped from Jamf Cloud Webhooks.

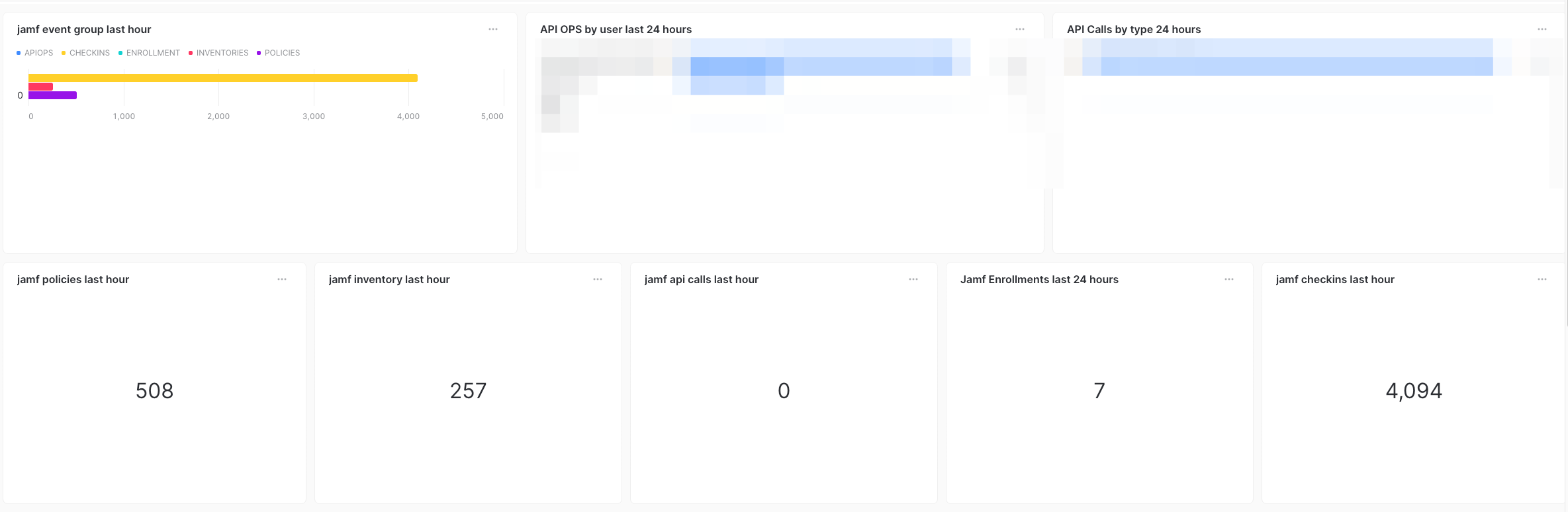

Example Query: Total Number of Events every hour

|

|

In the above query I am tracking the total count of each event type by the common events we want to track from our Jamf Cloud

instance. The above query also is tracking the number of events per the last hour, and you could adjust this part of

the query dateadd(hours, -1, current_timestamp()) to expand the time threshold.

Example Query: Total API Operations in last 24 hours

|

|

Caveat - Come to find out Jamf does not ship event hooks on failed API operations unfortunately. I have brought this to their attention and asked for this as a feature request. In this case I think tracking failures is more important than the successful operations.

Visualization Example:

Discoveries from the Data

This data is useful to monitor our Jamf Cloud instance. If events start to spike heavily in either an uptrend, or a downtrend

pattern, something is probably wrong. I did use this data to detect an impacted workflow from an API bug a year or so back.

Basically, an API call from JSS-Importer had failed updating a smart group.

The actual failure (after tracking it down) was that the Jamf API had timed out and returned a 502. However, it actually did

complete the PUT to the smart group, but failed to update the package payload of a policy. This resulted in a policy to

install one version of an app, and a smart group based on a different version of the same app. I only caught this because

my fleet was constantly spamming my Jamf Cloud with over 80,000 recon in a 24-hour period.

Security and Compliance Data

Every Org has a responsibility to their stakeholders, employees (or staff/students), and definitely their customers around security. This is a very big and encompassing subject. One could write entire books on this subject alone. So, for this blog post I am going to focus on disk encryption. Which is a part of the CIS framework, and a very common requirement.

Example Query: FV2 Status from Jamf

|

|

Example Query: FV2 Overall Score from Jamf

|

|

This will return the current FV2 status for every device record that has checked into Jamf in the last 30 days. Devices

that have not submitted inventory or checked into Jamf in greater than 30 days go into a separate violation, and will be

remediated in a separate flow. Any device returning Pending, Encrypting, and Encrypted are considered in a desired

state, and Not Encrypted will be used to calculate the percentage of devices in an undesired state.

NOTE: These queries do include an example of how to exclude say a service account that is used on non-human used devices that may not require full disk encryption for their specific use case.

Discoveries from the Data

This data was one of the easiest ones to address. Jamf will report 4 different states of disk encryption from a device

record. They include: Encrypted, Encrypting, Pending, Not Encrypted, and each of these is very self-explanatory.

In this data point Not Encrypted is the violation we are looking for. If devices stay in Pending or in Encrypting

for long periods of time, then we consider that a violation. The failure point is almost always FV2 was deployed, but the

user never rebooted, or in rare instance the MDM just failed to fully enable it.

Closing of Part I

This is still the beginning of my data journey, and I have much to learn and do still. What I would like to close with, is that having all these data points shared to me and my teams, has really changed the game. This data ends debates, answers questions, shows area of improvement, and is actionable. The last thing I would like to point out is that data drives collaboration. I partner with our security teams here, and we data share to each other. I share our fleet data with them, they share their security and agent data with me. Now when I engage with security, we both look at what the data is telling us, and we collaborate on it.