IT & Ops Data with Snowflake Part III

Gain Oversight of your Asset Inventory with Snowflake

Welcome to Part III of my mini blog series on being data driven with Snowflake. This post will be solely dedicated to how an organization can leverage a platform like Snowflake to improve their asset and inventory controls. Both Part I and Part II can be viewed with those links if you haven’t read them yet. This post will focus on end user devices for the most part and not networking hardware, application catalogs, servers, and so forth. The concepts in this post could be applied to those things though.

Every Organization I have worked for has struggled a bit with inventory of assets. It is not an easy task to just solve with a single tool or platform. Many aspects of Asset and Inventory control are also done with manual processes. Meaning, a human has to verify objects in asset trackers or change their states when physically verified. Some asset tools I wouldn’t even really consider products, but I would rather consider them platforms instead. This point of view comes from the vast amount of integration these platforms can have. With more integrations you will naturally have more data. This is where data cloud platforms like Snowflake can really enable IT & Ops teams to use data to drive change and improvements in their asset tools.

This is my personal opinion based off the experiences I have learned while working with data in Snowflake. There are always many ways to accomplish the same goals with data. So, this is not the only way one can be data driven around this topic, but my personal opinion this is a wonderful way to start.

Building Data Pipelines for Success

Inventory and Asset data is great by itself, as it can tell an Organization lots of information around their assets inventory. Inventory and Asset data is a lot better with friends. In a previous post I touched on applying baselines and context to your data sets, and this blog post will completely expand on that. Different tool stacks can act as a source of truth for the function is provides. MDM data for example can be the source of truth for all your active endpoints in your fleet checking in online. An Asset tool probably doesn’t have this data, and if you integrate it, you may end up with data lag. Remember, one of the key things about really being data driven is to get data quickly and reliably. If you have to wait days to get data, then you are days behind the current state of things. MDM data though is probably not really great asset data for several reasons. It probably does not tie into HR systems, and MDM systems typically do not support all the configurations around the many assets an Organization can own.

At a high level, this is my recommended starting point for data collection:

MDM Data- Event Data

- WebHooks

- Log data

HR Data- Employee Directory

- Employee Data (FTE, contractor, start date, end date, etc)

Asset and Inventory Data- Asset Type (laptop, desktop, Mac, PC, etc)

- Asset State (primary, secondary, test device, etc)

- Assignment Data (what human is assigned this device)

If you ship data from tools like the listed above, each will act as a source of truth for a specific data point in context. After you have this, you can start creating your baselines of what the data should mean. After you know it, you will be modeling your data and writing queries to get back the data you want on each point. Now, lets break down each data point and see how we can apply it.

Event Based MDM Data

Event based data is fantastic to get near-real-time data about your fleet. MDM tools that can ship Webhook Events, or any sort of logging that runs on a regular basis to indicate an asset has checked into your MDM tool and submitted data is pretty clutch. The MDM App data is the source of truth for active online endpoints in your fleet that are online and properly communicating to your MDM. Any MDM that can ship event data is pretty key here, if your MDM vendor does not support this I would recommend you file a feature request with them to get this data. It has a lot of value.

In this blog I will use Jamf Pro Webhooks as my event based data set. The specific

event we care about is the Iventory Event as this event is only generated when a device submits data to Jamf. Devices that

do not submit data are considered in a broken or undesired state. Devices not submitting data can easily be put into a different

data set, with a different context.

The WebHook data must contain some sort of primary key in it for it to be

valuable. Typically, a serial number is good enough, but the more Unique IDs a hook can ship the better. That would give

the IT & Ops teams more options to work with. The primary key will be used to run joins

to match different data sets you share with in the Snowflake platform. You should ingest these WebHooks as often and quickly

as possible. They are typically very minimal, so you can store them historically for a very long time.

HR Data

Most Organizations have some sort of Employee Directory, which includes some basic information about each employee that is shared throughout the entire Organization. This data should also be available in Snowflake, and it is very useful for many applications. HR data is often highly protected, and a lot of it is considered to be PII data. So, you must do your own due diligence on working with privacy and legal teams to ensure you are sharing allowable data. Things like department, email, start date of work, end date of work, manager, and the like is pretty much all you need to enhance your Inventory and Asset data assessments.

There is likely an integration to ship this data in your HR tools to some sort of cloud storage. If not, you might have to roll your own integration via an API. Admittedly, HR systems are an area where I do not possess deep knowledge on, as I have never really had to work with them a lot in my career. Remember you can create tables and views in Snowflake that allow data owners to share only the exact data they want, nothing more, nothing less. I wrote about this in Part II. So, HR will still have all the controls in place with in Snowflake to safely and securely only share the minimum data needed for this.

Inventory and Asset Data

This will be short and sweet. Ship all of your Inventory and Asset data to Snowflake. Yes, every single bit of it. Every CMDB table, every event hook, every API endpoint, so on and so forth. If you start off only shipping bits and pieces, you will likely end up in a situation where you don’t have all the data you really want and need. Then you will be constantly creating more data pipelines. So, do yourself a favor up front and just ship everything.

Turning the Data into Magic

Assuming that you have all these data pipelines in place, the data is shared, and you can leverage it to build data sets

for intelligence we can start to dive into it. In the beginning the data will likely look bad, and do not

worry about this. This really should just be expected. If you have never had this data before, you were in the dark about the

overall state of it all. As one of my favorite books stated, "Don't Panic!" You shouldn’t have an expectation that everything

you were doing was correct. The data will turn into magic you can leverage down the road to fix all the gaps in your

systems and processes.

The data will tell you a story of what you are missing. One of the first things I would establish is some baseline expectations. Something simple, like that every full time staff member is required to have a primary asset in your asset tracker. This is a great place to start, and it might require you to change how you approach asset management. Devices you deploy to end users should have explicit definitions of what the asset is, and this is why I think requiring every full time staff member having a primary asset is a good place to start. Secondary devices could be allowed, but the expectation of a secondary device is that it is used less frequently compared to a primary device. If we define these states in our asset tracking systems, it is quite trivial to leverage that data in Snowflake.

Establishing Baselines and Context

When you think about managing your fleet from an asset and inventory perspective, it helps to define a few things to establish some baselines. This helps you define the data sets which you can ingest into Snowflake. These things also can help define process as well. Here is a table of some examples one could use in their asset tracking system:

| Status | Definition | Notes |

|---|---|---|

| Primary | Primary work computer | Every staff member must have a primary work computer assigned to them |

| Secondary | Secondary work computer | Staff may request a secondary computer if they need one |

| Conference Room | Devices that are used in conference rooms | These devices can be seen more like appliances and not really end user computers |

| Lost | Devices that have been lost | Lost devices should be marked as lost and follow the lost device process |

| Stolen | Devices that have been stolen | Stolen devices should be marked as stolen and follow the stolen device process |

| In-Transit | Devices that are being shipped or received | Devices that are in the process of being shipped |

| Out for Repairs | Devices that have hardware failures | Any device that needs hardware repair should be marked as this |

| Testing | Devices that are used for testing | Test devices are often in weird and non-compliant states use this state for devices you want to filter from compliance. Think like a laptop running beta software. Test devices should never be used as a production device |

| Retired | Devices that are no longer being used by anyone | Using a “Retired” status means you can track the historical data and filter it |

| Disposed | Devices that have been officially eWasted | This state could imply you have disposed your old hardware with a certification of the data being destroyed |

With the example table above, we can now use these data points to make much safer assumptions. We can assume that actual

end user computes will only ever be either Primary or Secondary statuses, and we can leverage that in Snowflake. While

Conference Room devices we can safely assume those devices are only used for hardware needs in the conference rooms. The

Testing status I think is also important to track. As an IT & Ops person myself, I often have a few tester laptops in my

possession. These laptops run beta versions of macOS, Windows 10, various tools and applications in preview or beta release

as well. This means my tester laptops are probably never in compliance, and a high probability they are in a non-working state.

Test devices aren’t meant to do production work, they are meant to be used to test new software and configurations.

If our baseline consists of every employee being required to have a primary work device, we can use this in the data. How you establish these baselines and definitions is very much up to the Organization itself to decide. There aren’t really any right or wrong answers here, just answers that work best for your Organization. This also helps establish your asset tracker as a source of truth for these asset data sets. It is important to decide which data source is the source of truth, and your asset tracking system should be the source of truth for your assets.

My team has engaged with our Service Now team to help define these asset states in Service Now to make our data more actionable. It also helps you as the IT Ops professional make easier use of the data.

Putting the Data Together

With Snowflake, we can use the power of SQL, and execute queries with joins and leverage data sharing features. Let’s look at a query example:

|

|

The above query is a method we leverage to find devices that have somehow failed to get into our asset tracker. We all know failures happen, and if we can catch them and fix them, then typically everything is all good. When we do not catch them, and then an incident happens weeks or months later, it does not reflect well on IT. A lot of times adding an asset into your asset tracker is a manual process, or if there is automation with a reseller/vendor, sometimes that fails, or they make a mistake. I have observed all these edge cases in the real world be actual real failures. MDM webhooks will ship to Snowflake each time the device phones home, so this is where event based data is amazing to have. You don’t have to wait for workflows or code to run, just simply wait until the next time the device checks into MDM, and the event will be shipped to your data platform.

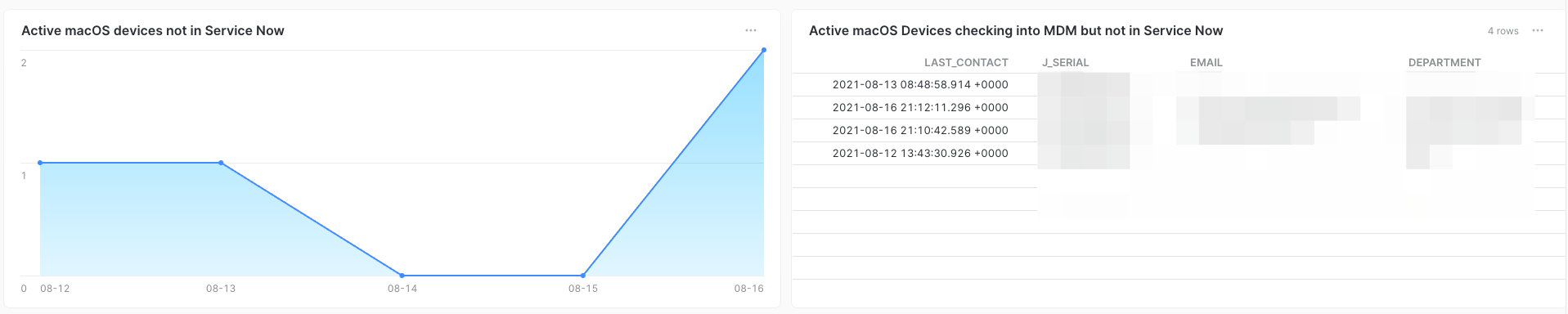

Visualization Example:

The data isn’t just valuable for insights and metrics, it is extremely valuable to help you find gaps in your tech stacks. Typically, everyone is happy when you are proactive, and this is exactly what we are doing in IT/Ops with data in Snowflake.

Reducing the Noise

Once you put in some effort into cleaning up your asset tracking systems, and using the data to help guide you, you will find

out the return on this investment is quite large. Using the examples from the table above, I now know that actual employees

will only ever have a primary, or a secondary computer. Put in the effort up front to improve and sanitize your data inputs,

and then reap the benefits downstream when your data gets ingested.

Filtering Example

|

|

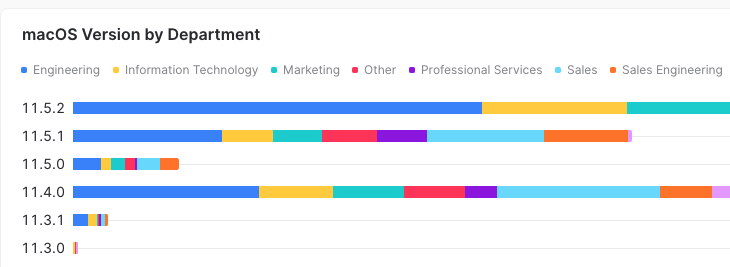

In the above query, we can now reap all the benefits of using data to fix your asset tracking system and get actual real numbers of devices by type and context. In IT Operations, you are typically responsible for patching the OS. SLAs are also attached to patching the OS many times. So, we know that IT must patch all primary, secondary, and conference room computers. These are the computers that have been defined contextually as in use and in production. MDM solutions have no knowledge at all of your asset systems, and your MDM doesn’t understand that perhaps not all devices are actually used in production workflows. What we are accomplishing here is the following:

- Devices submit inventory to MDM

- MDM will ship an inventory webhook when the device submits data to MDM

- Join that data to Service Now to ensure it is a production use device

Note: There is also a bit more going on here. A lot of times you might use a service account for a conference room computer, or just a computer that is not used by an actual human. Service accounts don’t have departments, or belong to Orgs/teams really. So, to mark this in our visualization if you detect the service account and the department info is blank then assign that device as a

Conference Roomcomputer as the department

Tracking Broken MDM Clients and Usage

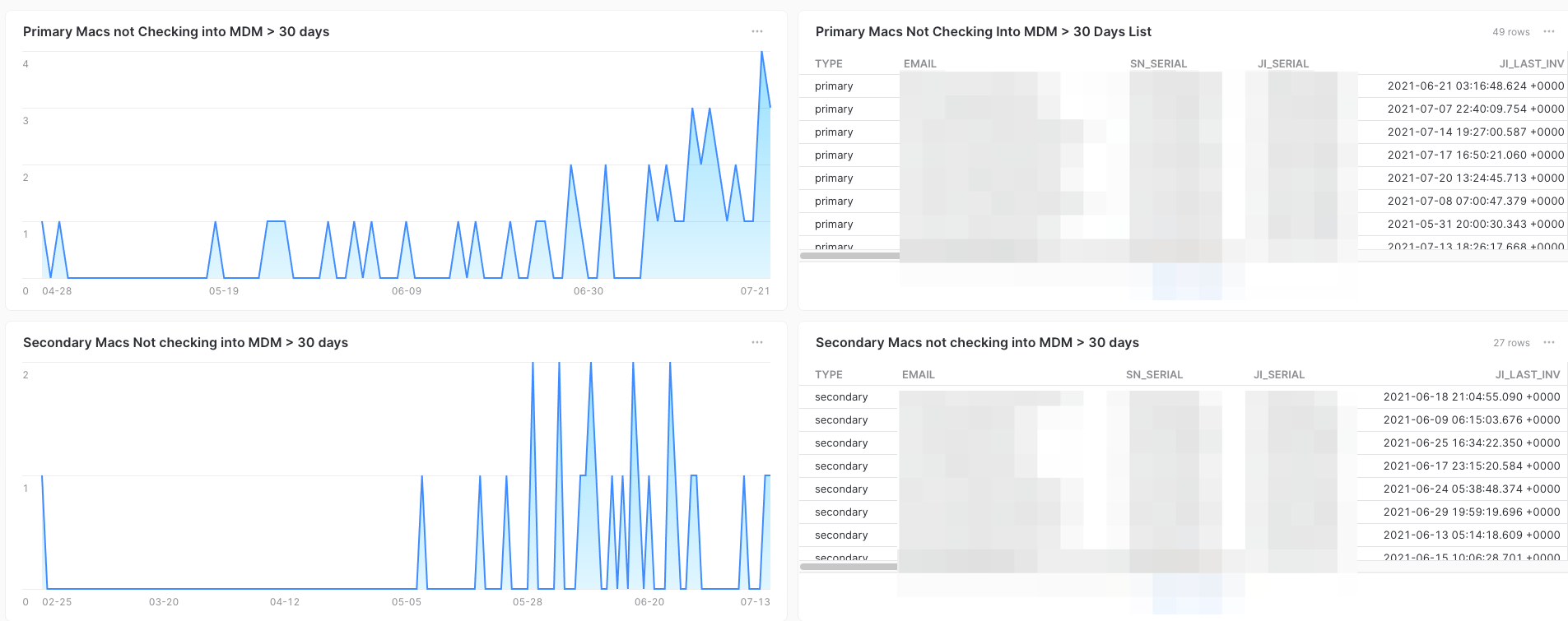

Everything has a failure rate in technology. Nothing runs at 100%, even the most highly available and scalable systems humans have ever created cannot guarantee you a 100% uptime. Compound this with secondary devices it adds complexity to the situation. People sometimes have legit business reasons to have more than one computer, and IT should help the people at your Org be as successful as possible. So, how do you track primary and secondary device usage? How would you track when a device stops phoning home to MDM and needs a re-enroll? What if I told you that you could accomplish both of these problems with a single query and a dash of data sharing?

|

|

NOTE: This query is one of several where we made separate dashboard tiles for each status in Service Now. So, you could edit this query to add more states and have a different visual effect if desired.

The above query does a few things. It pulls in our MDM data, joins that to service now to ensure we are looking at the devices we want to look at, and then goes even further and joins to HR data to ensure the devices is from an employee that is still actively employed. Why filter out exited employees? So, my philosophy is you never throw away data, but filtering data into specific buckets makes a lot of sense. It makes the data easy to digest and visualize as well. So, while I am only querying devices that are from actively employed employees at my Org, I am also filtering out all exited employees in an entire different set of tiles and data sets.

Visual Example

Another thing we leveraged from this data, is that it proactively helps us check back in with folks that have secondary devices. Many times an employee just needs a test device to test out a few things for a short period of time. Tracking this data in Snowflake allows us in IT to check back in when the device stops checking in to see if the person still needs it. Many times they are actually done using the device for their secondary purposes and will gladly turn it back into IT. Using Webhook Event data from our MDM tools, along with Service Now data, has allowed us to remediate when things break. It allows us to know very quickly when something is missed. Without a centralized data platform with data sharing I am not sure how one would accomplish this without putting massive amounts of effort and custom dev work into it.

Concluding Part III

During my data journey so far in IT, I have learned a lot, changed a lot of my views, and used the data to change things with in my organization and collaborate with other teams. Having solid asset data is important for not only audit purposes, but also for it being available to be leveraged. Tracking the failures and gaps we have with the data in Snowflake is invaluable, as it allows us to be proactive and fix data in our asset tracker all the time. The better our data is in our asset tracking systems, the better it is for everyone to use and leverage. It helps cut out the extra noise and enables your Org to focus on the specific problems at hand.

I think this will probably conclude my miniseries of the beginning of my data journey with Snowflake. I will definitely write and post more content, but I feel this is a good spot to end.