Servicenow Asset Data in Snowflake

Inventory Asset data is extremely important to any organization. It is also some of the most difficult data to keep accurate and up-to-date. We have been shipping ServiceNow data to Snowflake here for a good while. Over the years the asset data has proven time after time to be extremely valuable. It helps filter out tons of noise and allows one to focus on specific asset data at any given time. Just by creating some simple queries and joining ServiceNow data with other data sources. I recently created a new view in Snowflake to help us better filter data down to more specifics.

Benefits from Data Upstream

ServiceNow is a platform, which can host many applications within its ecosystem. You can have all sorts of workflows for

different teams, ticketing systems for various departments, knowledge base articles, and of course asset inventory. When

the ServiceNow developers or admins create new features or integrations within the application upstream, you can also have

that data downstream. For example, I engaged with our ServiceNow team not too long ago to integrate with our HR system

so if anyone was on a leave of absence we could mark their asset as LOA in the asset tracker in ServiceNow. This allows

teams downstream consuming this data in Snowflake to easily filter all leave of absence devices into their own bucket.

As a general philosophy, I tend to never get rid of data, but rather filter it into contextual buckets where it makes the

most sense.

Another thing we also got to benefit from, is that when someone marks an asset in ServiceNow as a VIP asset, we know it

belongs to a VIP end user. These are executives, department heads, VPs, and other important people that might need more of

an executive IT support model vs a standard IT support model. It also allows us to track VIP computers specifically, so we can

ensure they have the latest security patches. These type of enhancements that your ServiceNow developers and admins can

build within ServiceNow allow many other teams to benefit from this data downstream.

Modeling the Data

So, now that I have this new data added upstream by my ServiceNow team, I can easily just consume it downstream in Snowflake. Since we already have data pipelines coming in from the CMDB tables, when the person that developed these enhancements moved the changes to prod, the next time the data ingested it showed up in Snowflake. Now, I wanted to take advantage of this, so I created a view joining these tables together into a single spot within Snowflake we can consume this data from.

example SQL code:

|

|

- CMDB Tables in ServiceNow have a unique ID referred to as a

sys_idthat can act as a primary key - When data modeling, try to keep the view specific and avoid joining every data source into a single monolithic view

- When updating a view, you may want to use copy grants

- Always ask your local domain experts for guidance, don’t assume what data means

ServiceNow is a massive product, with 1000s of database tables, and it takes a lot of time and effort to be an expert on it. I am very far from an expert, so I engaged with my internal experts to help understand and model this data. They were able to help me understand what the data means and how to best model it.

Leveraging thew New Asset Data

We have an IT Remediation project, where we meet once a week and review our data and dashboards. A lot of things have surfaced from this meeting. We go through the data and dive into it, validating that is correct, and pointing out where we can enhance it. Our colleagues in the EU asked us to add geolocation to them, so they could easily identify assets in their region they supported that were out of compliance as an example. This shifted us into be proactive, but also we were validating the data was good. Before our support staff were manually filtering out device location to divide and conquer IT support projects. Now that data is joined into these dashboards.

I feel this important because it will start a culture of looking at data, checking if the data is accurate, identifying gaps and enhancements, and it really helps with cross team collaboration. We recently heavily campaigned our fleet to all update to the latest version of Ventura. We combined the geo data, MDM data and all those upstream data enhancements we added to ServiceNow asset data.

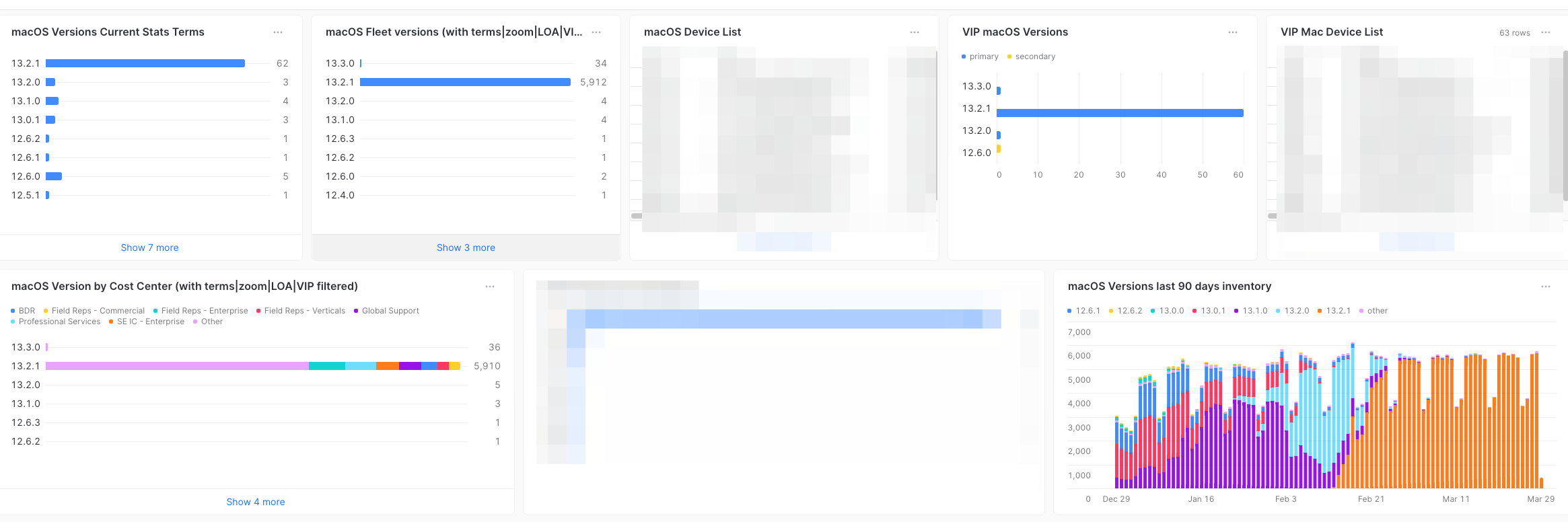

In the above dashboard we first started out with just webhook data from our MDM (jamf pro) and were tracking active macOS devices current OS. This was an okay place to start. However, once we were able to add the ServiceNow Asset data we were able to really gain much more insight much faster. Then over time we enhanced this dashboard with the following asset data from ServiceNow:

Asset function: primary, secondary, testing, conference room, etcAsset status: in-use, out for repairs, in-stock, retired, etcIntegration data: leave of absence, vip usersAsset assignment: what human has what device

Since ServiceNow is the source of truth for asset assignments, this allowed us to enrich the data even further and use the email address of the assigned human and join that to HR employee directory data. This is how we were able to look up where the employee worked out of, and thus gave us the ability to easily filter by geolocation so local employees could better support their region of users.

Imagine being a manager or an individual contributor(IC) for an international company and be able to easily filter out the regions you support, and review those specific device’s data by just checking a dashboard? Managers can get high level overviews and ICs can proactively identify technical issues and set plans to address them.

Same Old Problems

Many years ago I was scanning thousands of Macbook Air laptop serial numbers from a big laptop deployment my team and

I were doing at a previous job of mine. We quickly noticed that the barcode we scanned would append the letter S as the

beginning of the serial number. We programmed the scanners to ignore the first character and that fixed it. Well, this

is one of those human error problems. Humans might forget to program their scanners sometimes. So, we can use data in Snowflake

to audit and fix our asset data.

Quick query to get serial numbers that start with S

|

|

Another classic problem is how do you monitor your asset data to ensure you did not make a human error and forget to add assets to your asset tracker.

|

|

In efforts to ensure our asset data is good and accurate, we can simply use not exists in Snowflake to compare serial

numbers of actively checking in devices that do not have valid asset records in ServiceNow. This example is a webhook

event from our MDM, and we check if the serial number of that device exists. That is it, that is how simple this check is.

Asset Data is Important

Asset data accuracy is something that is so tough to keep up with. Assets change states when they go out for repairs, a hardware refresh, get returned, as each one of those states follows a process that involves a human doing work. Asset data is also some very important data that really needs to be always accurate. Having your hardware asset data in a product like Snowflake gives you avenues to actually actively test and ensure your asset data is accurate and audited. We find mistakes all the time, and we find them the second the data is ingested into Snowflake. The data it provides can be joined to other data sources and build insights and metrics off of it all. This data is used across many teams for many purposes. Security will want to vary their priorities based on people and teams, and with asset data they can do that. Teams that have more privileged access generally yield higher priority alerts. The asset data being accurate is a must for these things. Asset data gives us the person who the asset is assigned to, and we join that across tons of different data sets.

We also were looking at secondary device data a lot over the past few years. There are times when someone might need another computer to perform work on in addition to their primary device. So we issue them another device to help them do their work. However, how do you know when the secondary device is no longer needed? If a secondary device is not needed anymore, and it is not online, it is most likely not getting patched either. Well, that asset data tells us the serial number and the human tied to that device, and we can join it to MDM or agent data. From here we can simply deduct if the device is not checking into our MDM servers it is not coming online, and likely not being used. If we detect a secondary device is not online greater than an arbitrary timeframe (30 or 60 days, etc) we can trigger workflows that will start a process to see if we can get that secondary system back.

These are just some reasons and use cases I feel outline the importance of asset data and how having that in a tool like Snowflake can really help boost accuracy of your asset data. Thanks for reading!